●Pacemaker、Corosync、Stonithについて

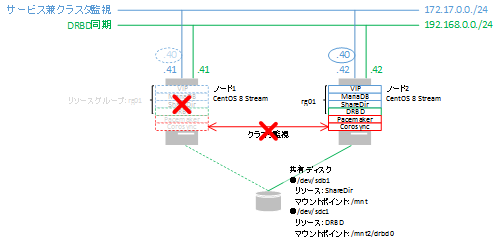

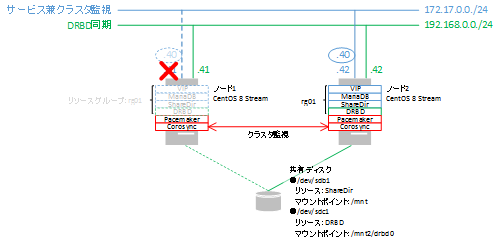

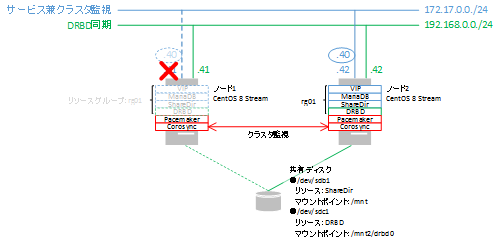

●VMware ESXi 6.7上でCentOS 7 + Pacemaker + Corosyncの設定に挑戦

参考URL:CentOS 7 + Pacemaker + CorosyncでMariaDBをクラスタ化する① (準備・インストール編)

参考URL:Pacemakerの概要

参考URL:VMware ESXiに仮想共有ディスクファイルを作成する

参考URL:

参考URL:【ざっくり概要】Linuxファイルシステムの種類や作成方法まとめ!

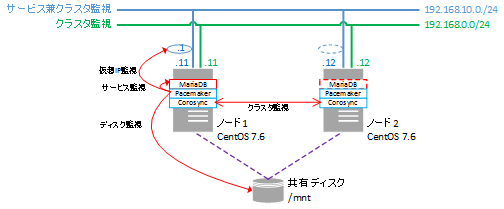

ノードの準備

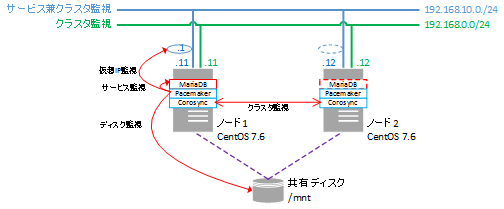

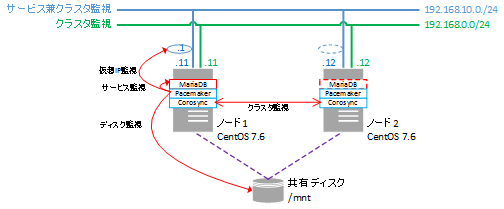

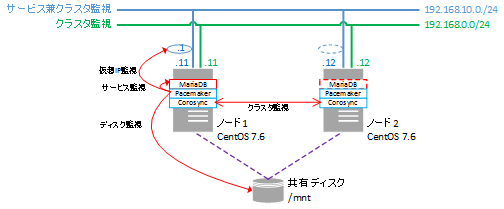

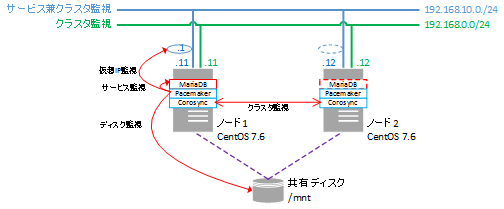

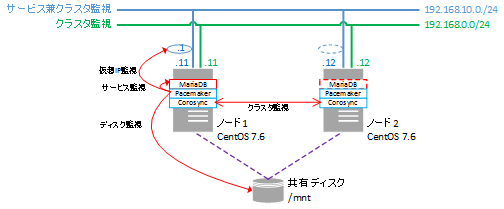

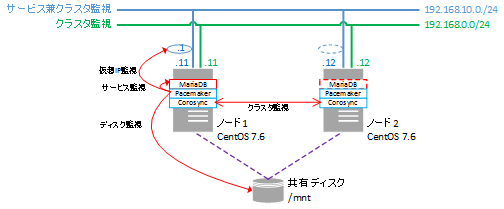

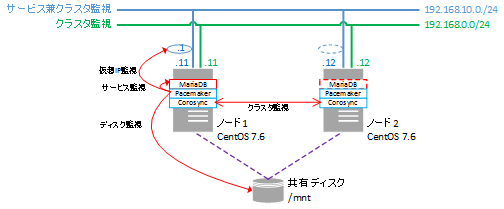

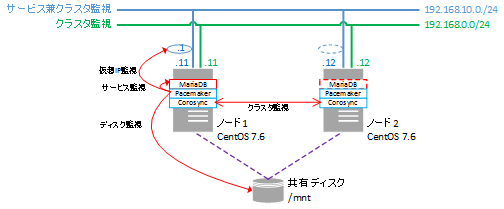

ノード(centos7-1、centos7-2)を準備します。

今回はCentOS 7のカーネルバージョン3.10.0-957.21.3.el7.x86_64でノードを構築します。

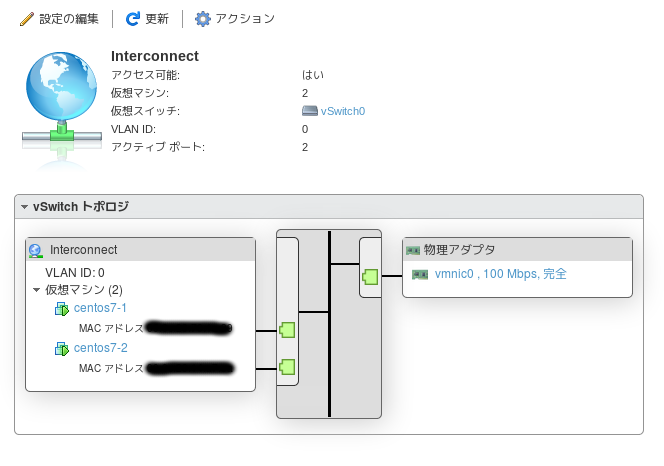

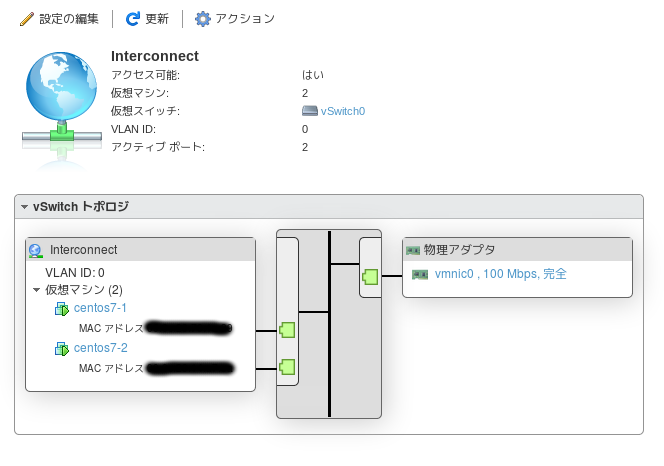

VMware Host Clientにログインし、インターコネクト用のネットワーク(名称:Interconnect)を作成します。

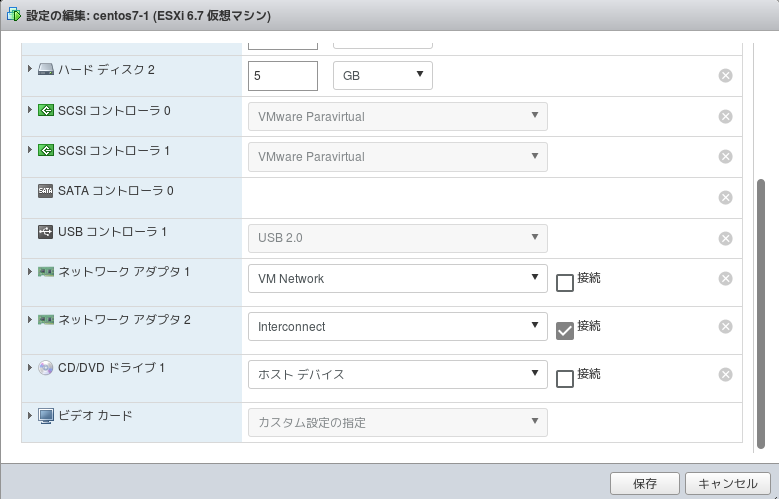

各ノードでインターコネクト用のNIC(ネットワークアダプタ2)を作成します。

このNICにはPacemakerで使用するインターコネクト用のIPアドレスを割り当てます(例:centos7-1:192.168.0.11、centos7-2:192.168.0.12)。

SELinux及びFirewalldについて操作が不慣れな場合、ノードのSELinux及びFirewalldは無効にしておくことをお薦めします。

共有ディスクの作成

共有ストレージをベースとした仮想ディスクは、VMFSデータストア上でEagerZeroedThickオプションを使用して作成する必要があります。

これらの操作は、コンソール上で、vmkfstoolsコマンドを実行する方法とユーザインターフェースとしてVMware Host Clientを使用する方法があります。

ここでは下記の条件で仮想共有ディスクファイルを作成します。

コンソールより仮想共有ディスクファイルを作成方法

コンソールより仮想共有ディスクファイルを作成するには、下記のようにします。

VMware Host Clientによる仮想共有ディスクファイルの作成方法

下記は同じ(一つの)仮想ディスクを2台の仮想ホストによりSCSIで共有させる(いずれかの仮想ホストからのみマウント)時に実施した方法です。

DRBDを構築する場合、各仮想ホストで同サイズの仮想ディスクを作成し、各仮想ホストに接続する必要がありますので、各仮想ホストで仮想ディスクを新規作成してください。

事前にディレクトリ下記のディレクトリを作成します。

起動後、各ノード(centos7-1、centos7-2)からディスクのデバイスを見ることができるか確認します。

パーティションの設定

デバイスが認識されましたので、ファイルシステムの作成及びパーティションを設定します。

●Pacemaker及びCorosyncのインストール・初期設定(CentOS Stream 8)

参考URL:CentOS 8 + PacemakerでSquidとUnboundを冗長化する

参考URL:CentOS 8.1 (1911)でPacemaker / Corosyncが利用可能に

CentOS 7の場合は「 Pacemaker及びCorosyncのインストール・初期設定 」以降を参照してください。

CentOS 8からクラスタソフトのリポジトリが変更されたようです。

クラスタ用ユーザを設定する。yumでインストールすると、「hacluster」ユーザが作成されているはずなので確認します。

クラスタサービスを利用できるようファイアーウォールを設定します。

※片方のノードで実施

Pacemaker・Corosyncのクラスタを作成(CentOS Stream 8)

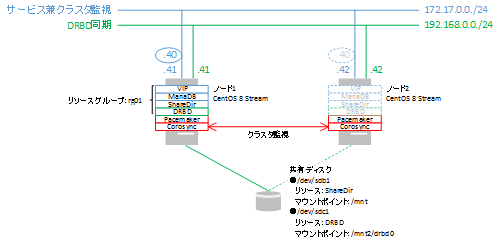

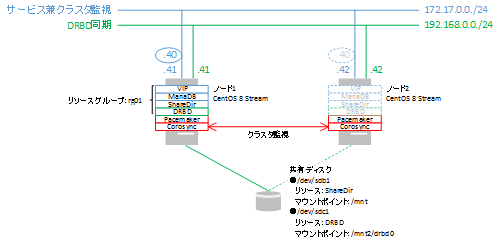

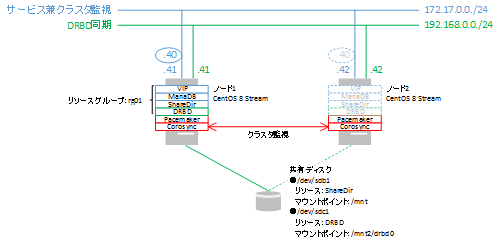

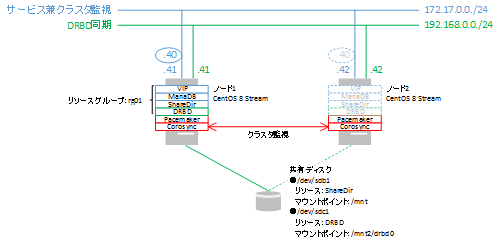

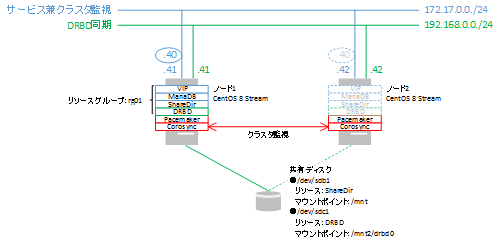

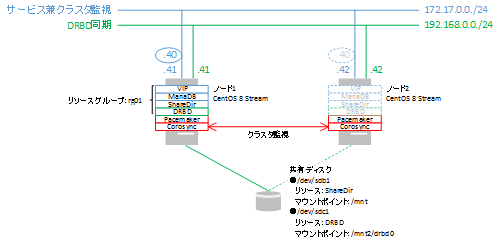

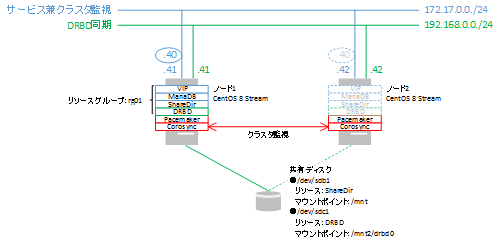

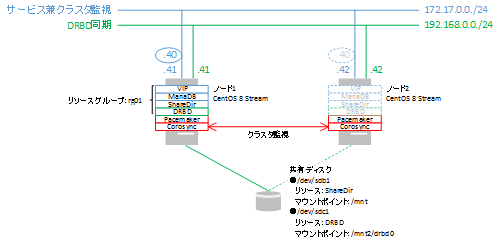

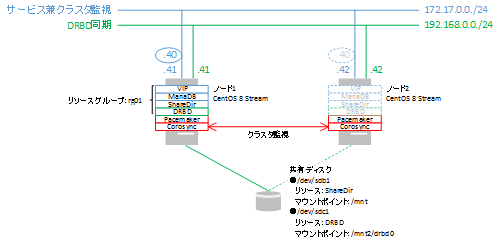

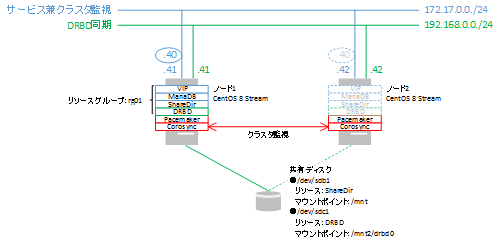

172.17.0/24側に仮想IPを設定するため、下記のように実行しました。

クラスタの管理通信の確認は以下コマンドで実施します。2つのリンクが設定されていることがわかります。

クラスタのプロパティを設定(CentOS Stream 8)

参考URL:Pacemaker/Corosync の設定値について

検証目的ですので、STONITHは無効化します。クラスタの設定として、STONITHの無効化を行い、no-quorum-policyをignoreに設定します。

本来、スプリットブレイン発生時の対策として、STONITHは有効化するべきです。

no-quorum-policyについて、通常クォーラムは多数決の原理でアクティブなノードを決定する仕組みですが、2台構成のクラスタの場合は多数決による決定ができません。

この場合、クォーラム設定は「ignore」に設定するのがセオリーのようです。

この時、スプリットブレインが発生したとしても、各ノードのリソースは特に何も制御されないという設定となるため、STONITHによって片方のノードを強制的に電源を落として対応することになります。

リソースエージェントを使ってリソースを構成する(CentOS Stream 8)

参考URL:動かして理解するPacemaker ~CRM設定編~ その2

リソースを制御するために、リソースエージェントを利用します。リソースエージェントとは、クラスタソフトで用意されているリソースの起動・監視・停止を制御するためのスクリプトとなります。

今回の構成では、以下のリソースエージェントを利用します。

起動:「仮想IPアドレス」→「ファイルシステム」→「MariaDB」

停止:「MariaDB」→「ファイルシステム」→「仮想IPアドレス」

この順序を制御するために、リソースの順序を付けてグループ化した「リソースグループ」を作成します。

クラスタの起動(CentOS Stream 8)

クラスタを起動します。

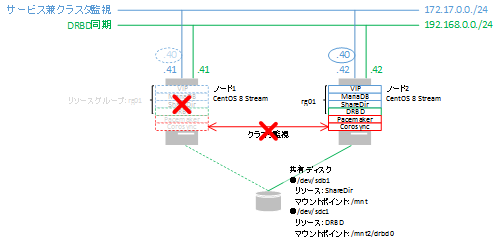

手動フェールオーバーその1(CentOS Stream 8)

手動でフェールオーバーさせるために、わざわざサーバーを再起動させるのは面倒なので、コマンドでリソースグループをフェールオーバーさせます。

手動フェールオーバーその2(CentOS Stream 8)

コマンドでフェールオーバーさせる手順以外にも、ノードをスタンバイにすることで強制的にリソースグループを移動させることもできます。

サーバーダウン障害(CentOS Stream 8)

VMware Host Clientにて、仮想マシンの再起動を実施します。

リソースグループrg01が移動していることが分かります。

このままではクラスタとして稼働しませんので、元の状態にするためにクラスタに組み込みます。

NIC障害(CentOS Stream 8)

参考URL:6.6. リソースの動作

VIPの監視にon-fail=standbyのオプションを指定しないとうまく切り替わらないので、あらかじめ設定しておきます。

切断するNIC(チェックを外します。上手く動作しない場合、完全に削除します。)は仮想IPアドレスが割り当てられるネットワークに属するNICアダプタです。

1分程度でリソースVirtualIPがFAILEDステータスになり、リソースグループがフェールオーバーされます。

その後、障害ノードを復旧させ、クラスタに組み込むために以下コマンドを実行します。

どうやらもともと稼働していたノードのスコアがINFINITYのままになるため、フェイルバックするようです。

この辺りをきちんと制御する意味でも、STONITHの設定を有効にし、障害が発生したノードは強制停止する設定をした方がよいかもしれません。

●PacemakerでZabbix-Serverを制御する(DRBD利用)(CentOS Stream 8)

参考URL:Pacemaker/Corosync の設定値について

参考URL:CentOS8でZabbix5をインストールし、Zabbix Agentを監視する

参考URL:HAクラスタをDRBDとPacemakerで作ってみよう [Pacemaker編]

※この方法でPacemakerを構成した場合、シャットダウンする前に下記を実施してください。

1.スタンバイ側のクラスタ機能の停止

2.Zabbixサーバの停止

3.MariaDBの停止

4.シャットダウンの実施

または

1.Pacemakerの停止

2.シャットダウンの実施

必要なソフトをインストールします(インストール済みの場合、該当作業は不要です)。

Paeemakerで制御されるZabbixで使用するMariaDBのデータベースディレクトリをDRBDを使用してマウントされているディレクトリ上に保存しています。

このDRBDもPacemakerにより制御されます。

DRBDの構築方法については「DRBDの構築について」を参照してください。

MariaDBのデータベースディレクトリの変更方法については「MariaDB(MySQL)のデータベースのフォルダを変更するには(pacemaker、DRBD対応)」を参照してください。

特に記載がない場合、2ホストとも同じ作業を行います。

特定のホストでのみ行う作業は、プロンプトにホスト名を記載します。

DRBDは設定済みとします。

DRBDは自動フェールバックを無効としておかないとDRDBでスプリットブレインが発生し、2台ともStandAlone状態となってしまう場合があります。

この対策のため、自動フェールバックを無効とします(Warningは無視します)。

ファイアーウォールを動作させている場合、各ソフトで使用するポート(http用ポート:80、Zabbix用ポート:10051)を開けてください。

MariaDBは「MariaDB(MySQL)のデータベースのフォルダを変更するには(pacemaker、DRBD対応)」により設定済みとします。

必要なソフトをインストールします(インストール済みの場合、該当作業は不要です)。

Zabbixのデータベース設定(CentOS Stream 8)

MariaDBにログインする。

firewalldの設定

http,Zabbix Agentの通信を許可するために下記のコマンドを実行する。

Zabbixサービス起動

centos8-str1でzabbix-server等を起動します。

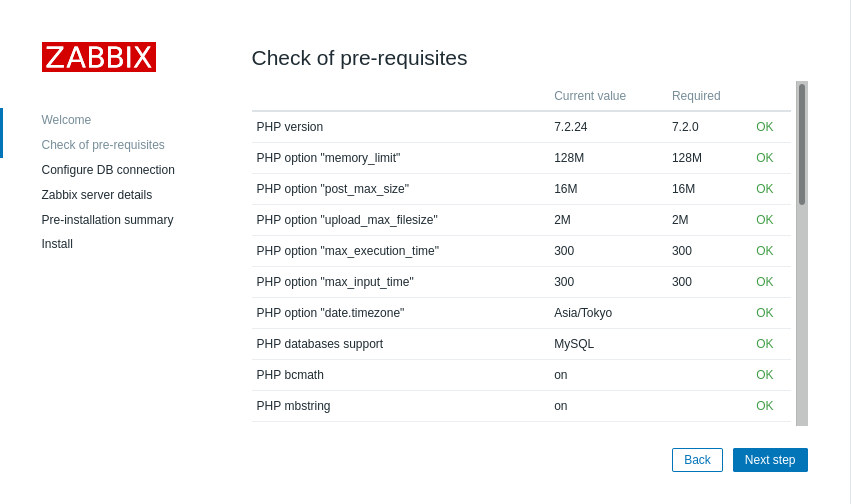

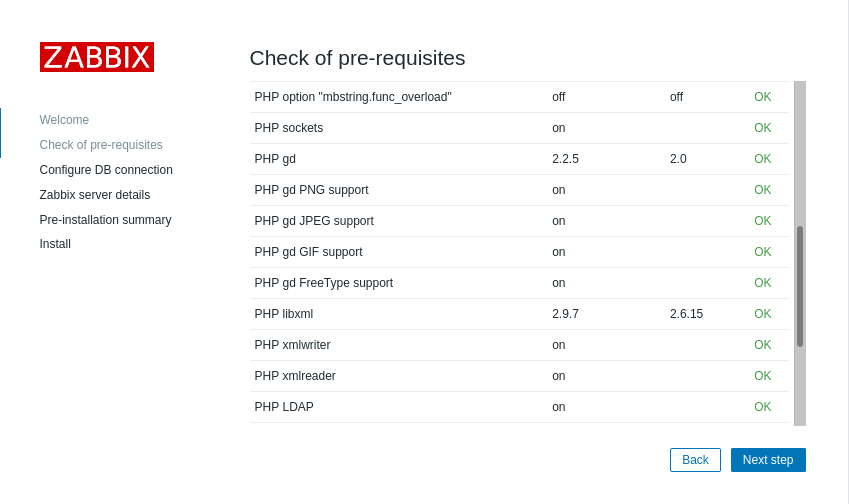

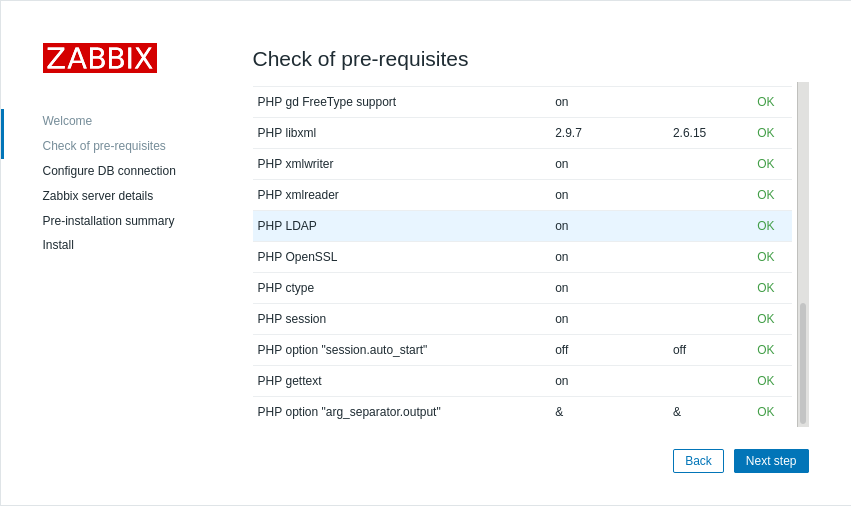

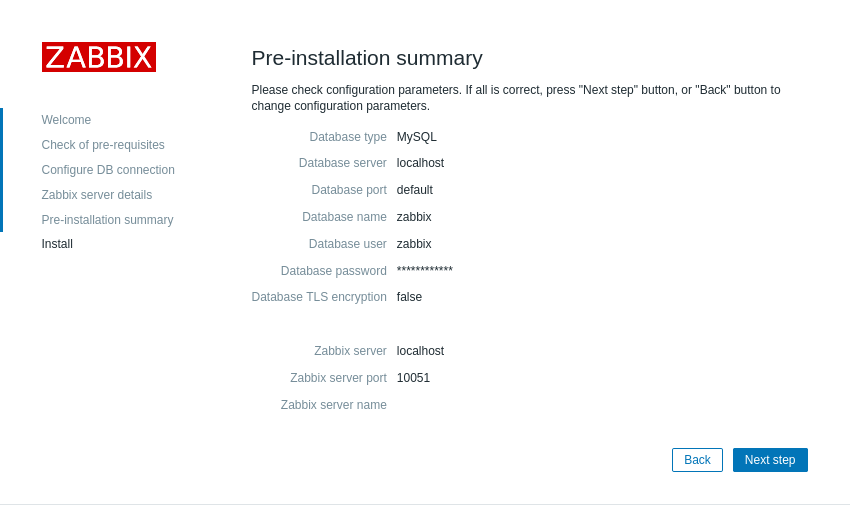

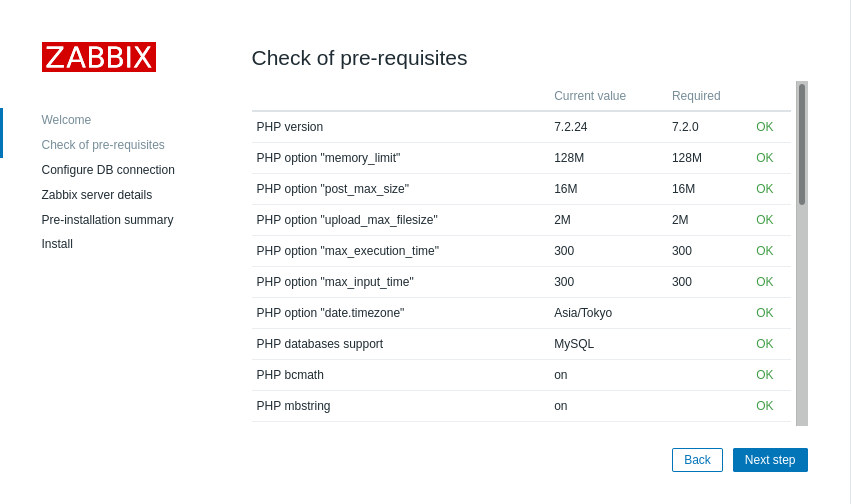

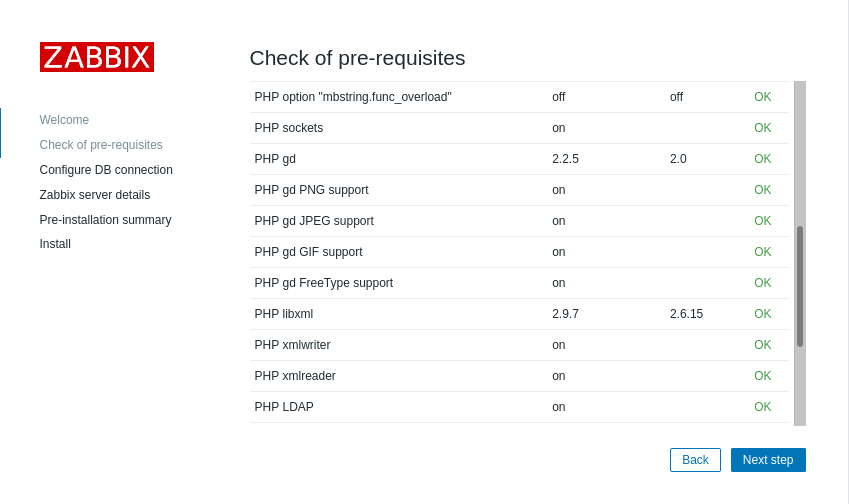

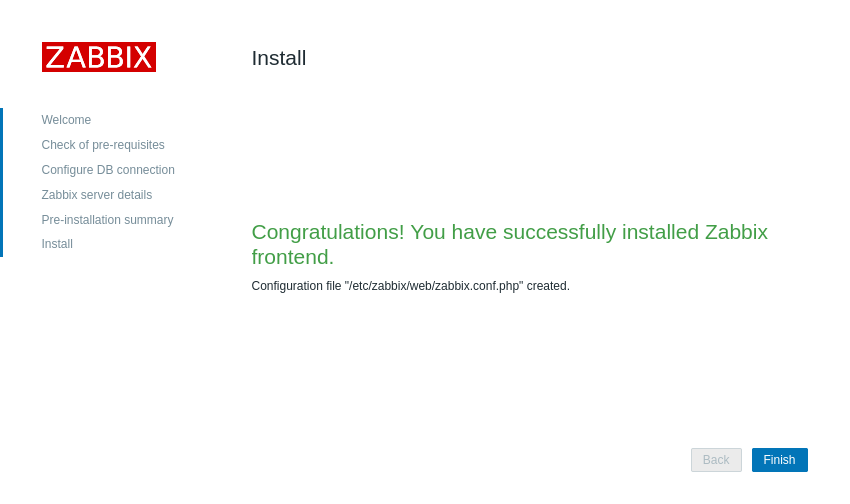

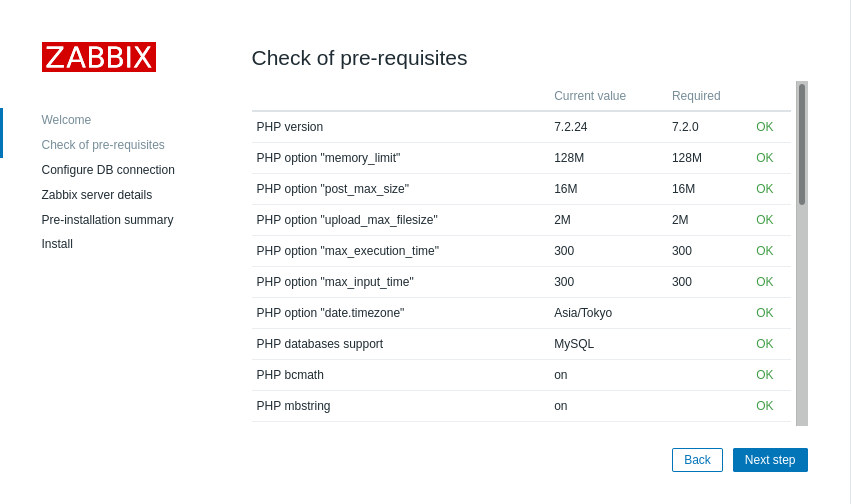

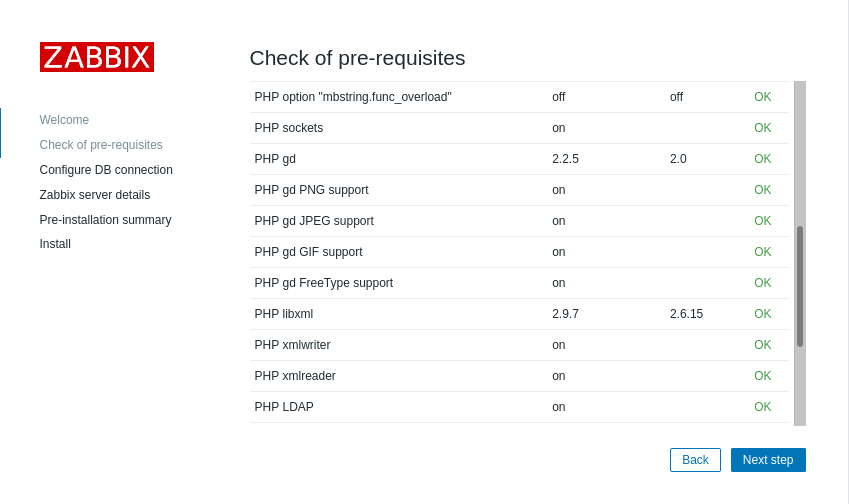

Zabbix 5.4インストール時の初期設定画面はこちらです。

Next stepを押下します。

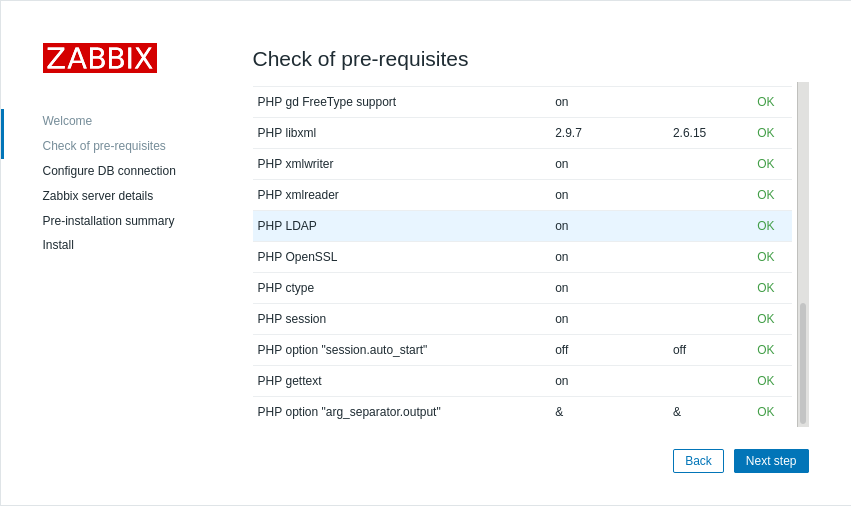

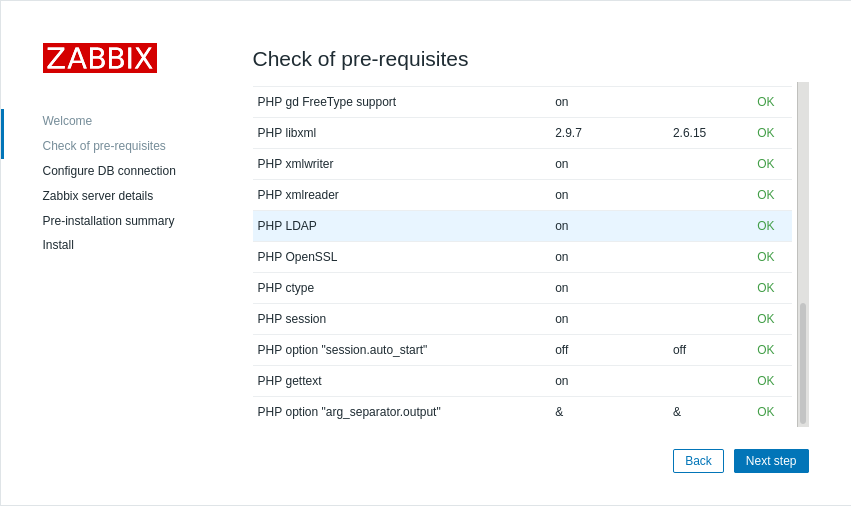

全ての項目が「OK」となっていることを確認してください。

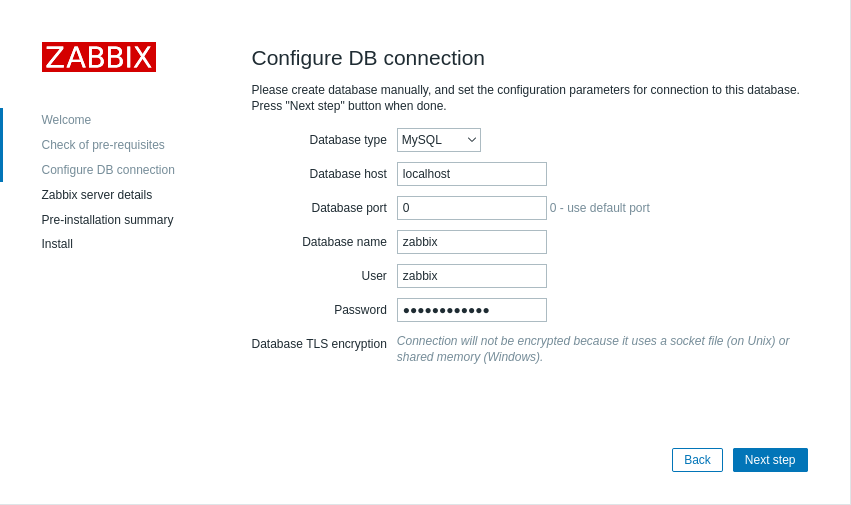

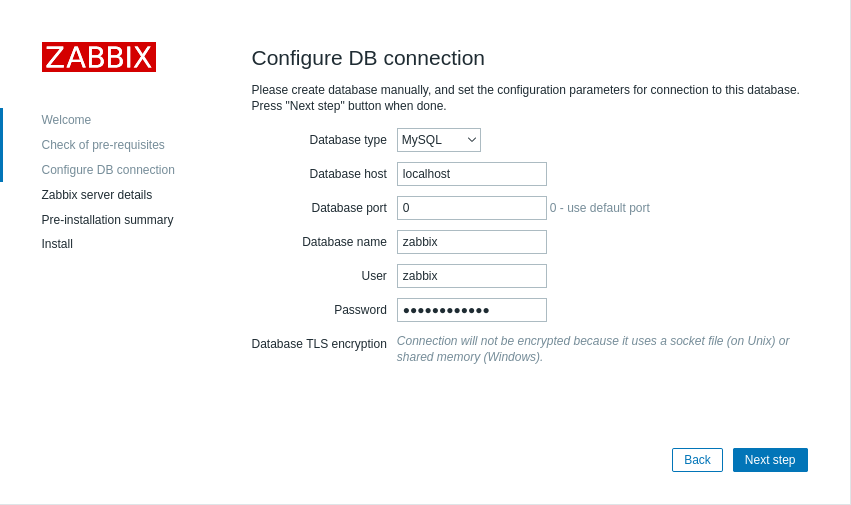

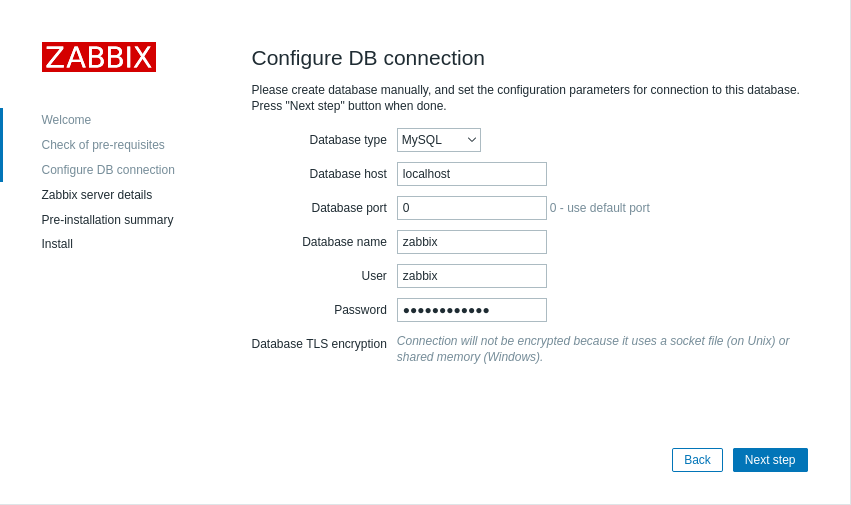

/etc/zabbix/zabbix_server.confで設定した値を入力します。

portは0のままで構いません。

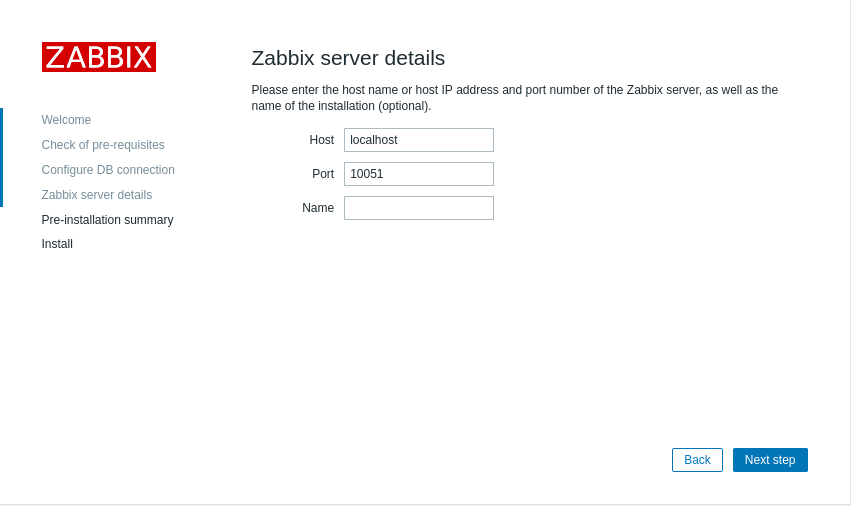

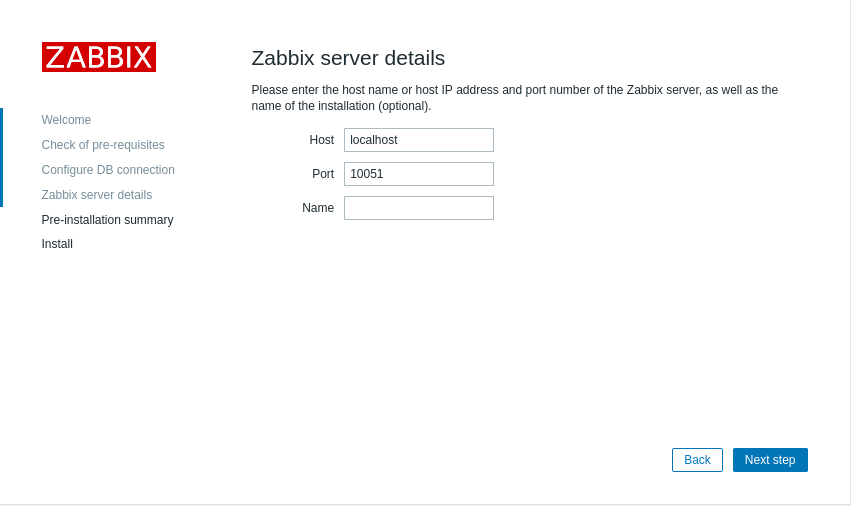

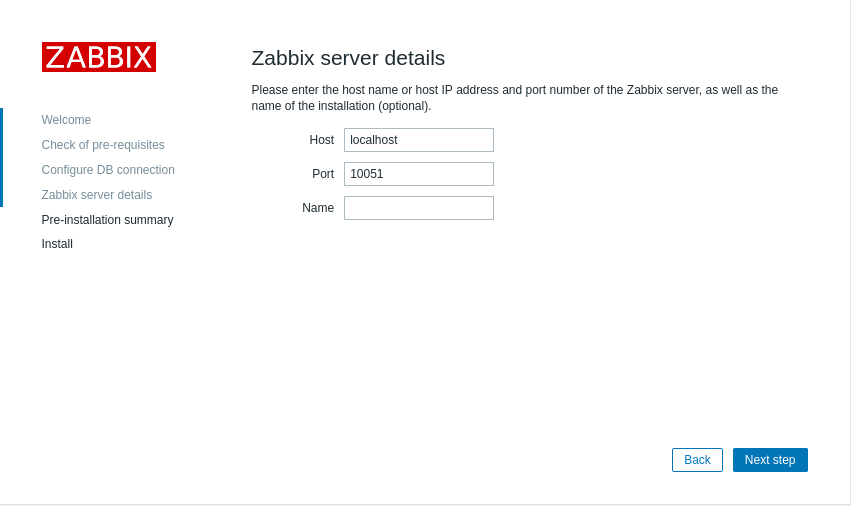

下記ウィンドウではデフォルトのまま次に進みます。

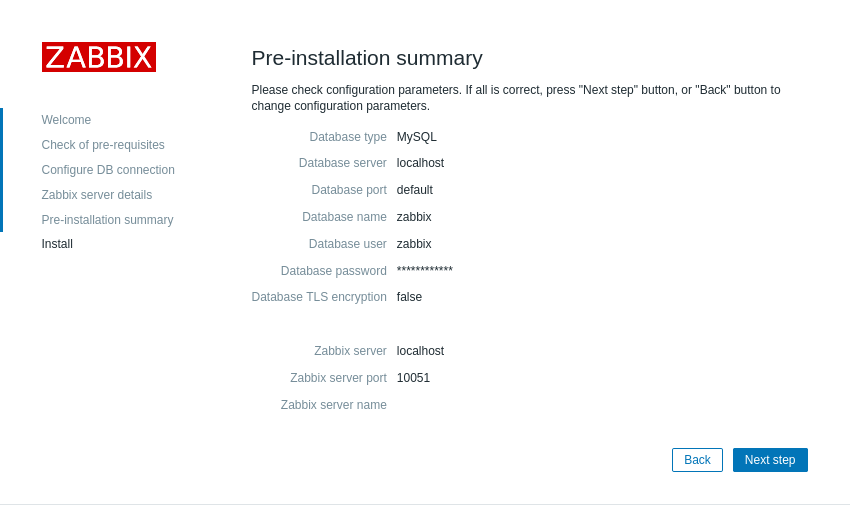

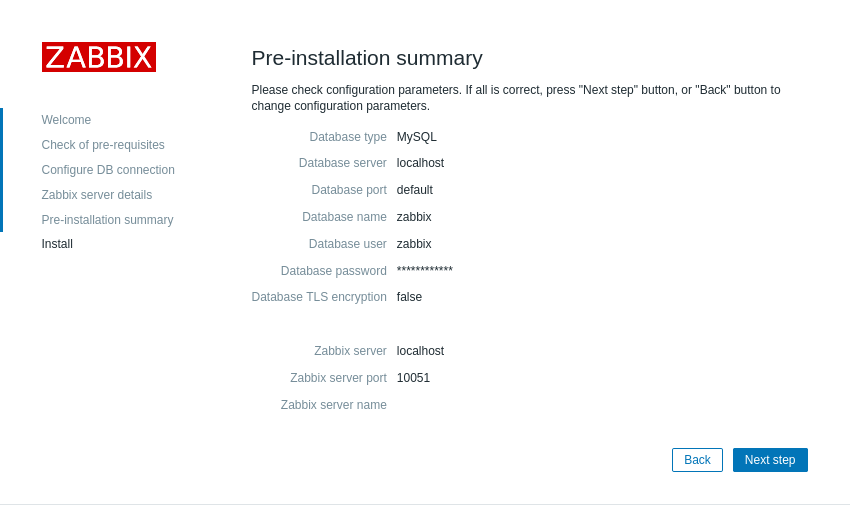

設定情報を確認し、問題なければ次に進みます。

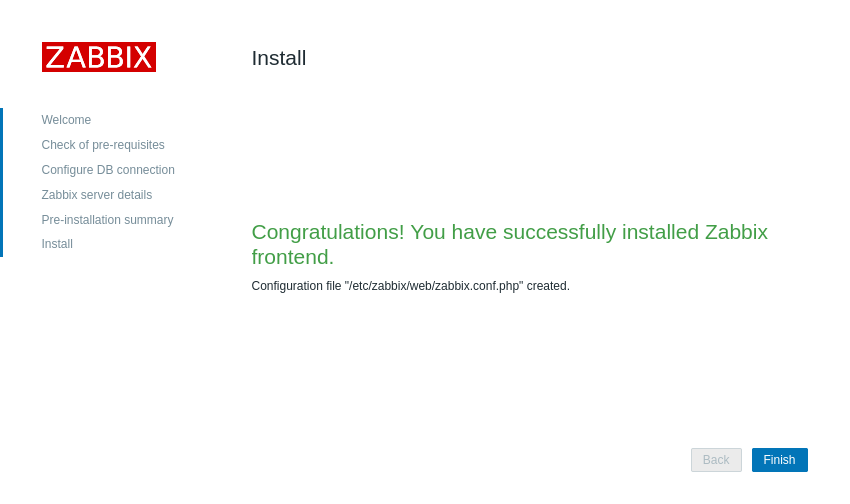

無事設定が完了しました。

デフォルトのユーザ名とパスワードは下記のとおりです。

セットアップ完了後にウィザードによって作成された /etc/zabbix/web/zabbix.conf.php は、centos8-str2にもコピーしておく必要があります。

設定済みのリソースグループ rg01 に追加するので、クラスタ(pcsd)を停止する必要はありません。

●pacemakerの現在のリソースを破棄し、新たな順序でリソースを作成する(CentOS Stream 8)

参考URL:Pacemaker/Corosync の設定値について

pacemakerのリソースを下記のような順序で起動するように(停止はその逆)再設定します。

新たな順序でリソースを作成するため、現在設定されているリソースを削除します。

また、1回の障害検知でフェールオーバーするように設定しています。

プロセスを停止した場合(Apache)(CentOS Stream 8)

参考URL:HAクラスタをDRBDとPacemakerで作ってみよう [Pacemaker編]

httpdを停止します。

fail-countがmigration-thresholdに到達すると、リソースは他のノードで起動します。

今回は制約を設定していますので、原因となるリソース以外も他のノードにフェールオーバーすることになります。

一度、このfail-countをリセットします。

リソースをクリーンアップして綺麗な状態にしておきます。

プロセスを停止した場合(MariaDB)(CentOS Stream 8)

参考URL:HAクラスタをDRBDとPacemakerで作ってみよう [Pacemaker編]

1回でもfail-countがカウントされるとフェールオーバーするように設定していない場合、migration-thresholdのデフォルト値(migration-threshold=1000000)を変更してフェールオーバーするようにします。

MariaDBを停止します。

エラーをクリーンアップし、フェールバックさせます(下記は正常にフェールバックしないパターンです(2021.03.30現在)。)。

下記コマンドを実行しましたが、暫くしても反応がないため Ctrl + C で抜けました。

とりあえず記録されているFailed Resource Actionsをクリーンアップします。

Zabbix ServerがFAILED centos8-str2 (blocked)となる前(timeout前)に、残存しているZabbix Serverのプロセスを全てkillすることでリソースが移動できることを確認しました。

2021.03.31現在、Pacemaker単独でこれを解決する方法が見つからなかったため、cronで1分毎にMariaDBのプロセスがなくなったこと(MariaDBの停止)を検知したら、強制的にZabbix Serverのプロセスを全てkillする方法としました。

下記に記載していますが、Zabbix Serverのプロセス停止時には正常にリソースが移動することを利用することとしました。

プロセスを停止した場合(Zabbix Server)(CentOS Stream 8)

参考URL:HAクラスタをDRBDとPacemakerで作ってみよう [Pacemaker編]

1回でもfail-countがカウントされるとフェールオーバーするように設定していない場合、migration-thresholdのデフォルト値(migration-threshold=1000000)を変更してフェールオーバーするようにします。

Zabbix Serverを停止します。

エラーをクリーンアップし、フェールオーバーさせます。

-

Pcs:Pacemaker/Corosync構成ツール

コマンドラインインターフェースによるpacemaker/corosyncの制御や設定が可能です。

Pacemakerのクラスタについて、作成・表示・変更などを設定できます。 -

Pacemaker:リソース制御機能

-

アプリケーション監視・制御機能

Apache, nginx, Tomcat, JBoss, PostgreSQL, Oracle, MySQL, ファイルシステム制御、仮想IPアドレス制御、等、多数のリソースエージェント(RA)を同梱しています。

また、RAを自作すればどんなアプリケーションでも監視可能です。 -

ネットワーク監視・制御機能

定期的に指定された宛先へpingを送信することでネットワーク接続の正常性を監視できます。 -

ノード監視機能

定期的に互いにハートビート通信を行いノード監視をします。

また、STONITH機能により通信不可となったノードの電源を強制的に停止し、両系稼働状態(スプリットブレイン)を回避できます。 -

自己監視機能

Pacemaker関連プロセスの停止時は影響度合いに応じ適宜、プロセス再起動、またはフェイルオーバを実施します。

また、watchdog機能を併用し、メインプロセス停止時は自動的にOS再起動(およびフェイルオーバ)を実行します。 -

ディスク監視・制御機能

指定されたディスクの読み込みを定期的に実施し、ディスクアクセスの正常性を監視します。

-

アプリケーション監視・制御機能

- Corosync:クラスタ制御機能

-

Stonith:排他制御機能

制御が利かなくなったノードの電源を強制的に停止してクラスタから「強制的に離脱(Fencing)」させる機能のことです。

-

フェンシング(電源断)制御

ipmi(IPMIデバイス用)

libvirt(KVM,Xen等仮想マシン制御用)

ec2(AmazonEC2用)

等

-

サーバ生死確認、相撃ち防止

stonith-helper

-

フェンシング(電源断)制御

●VMware ESXi 6.7上でCentOS 7 + Pacemaker + Corosyncの設定に挑戦

参考URL:CentOS 7 + Pacemaker + CorosyncでMariaDBをクラスタ化する① (準備・インストール編)

参考URL:Pacemakerの概要

参考URL:VMware ESXiに仮想共有ディスクファイルを作成する

参考URL:

参考URL:【ざっくり概要】Linuxファイルシステムの種類や作成方法まとめ!

ノードの準備

ノード(centos7-1、centos7-2)を準備します。

今回はCentOS 7のカーネルバージョン3.10.0-957.21.3.el7.x86_64でノードを構築します。

VMware Host Clientにログインし、インターコネクト用のネットワーク(名称:Interconnect)を作成します。

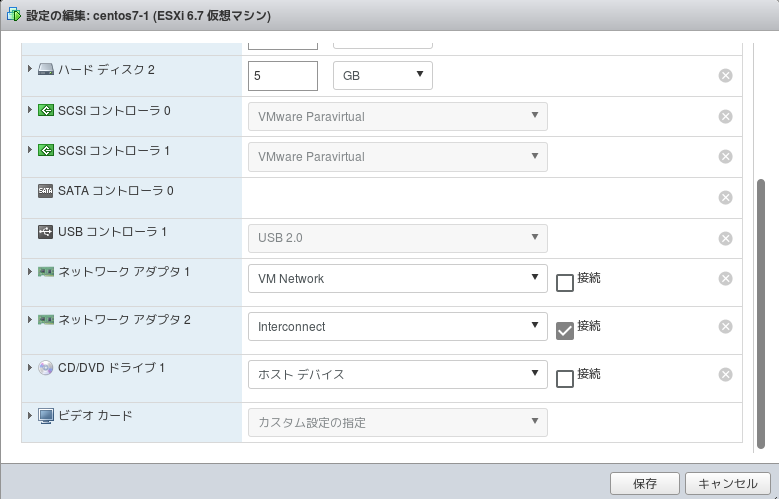

各ノードでインターコネクト用のNIC(ネットワークアダプタ2)を作成します。

このNICにはPacemakerで使用するインターコネクト用のIPアドレスを割り当てます(例:centos7-1:192.168.0.11、centos7-2:192.168.0.12)。

SELinux及びFirewalldについて操作が不慣れな場合、ノードのSELinux及びFirewalldは無効にしておくことをお薦めします。

共有ディスクの作成

共有ストレージをベースとした仮想ディスクは、VMFSデータストア上でEagerZeroedThickオプションを使用して作成する必要があります。

これらの操作は、コンソール上で、vmkfstoolsコマンドを実行する方法とユーザインターフェースとしてVMware Host Clientを使用する方法があります。

ここでは下記の条件で仮想共有ディスクファイルを作成します。

-

仮想共有ディスクのvmdkファイルを置く場所

/vmfs/volumes/datastore2/com_datastore -

仮想共有ディスクファイル名

com1.vmdk -

仮想共有ディスクの容量

5GB

コンソールより仮想共有ディスクファイルを作成方法

コンソールより仮想共有ディスクファイルを作成するには、下記のようにします。

# ssh vmware password: [root@vmware:~] mkdir /vmfs/volumes/datastore2/com_datastore [root@vmware:~] vmkfstools -d eagerzeroedthick -c 5G /vmfs/volumes/datastore2/com_datastore/com1.vmdkこちらの方法では、VMware Host Client上の設定で共有ディスクの作成時にzeroedthickと認識されてしまい、2つのノードから共有させることができませんでした(原因不明)。

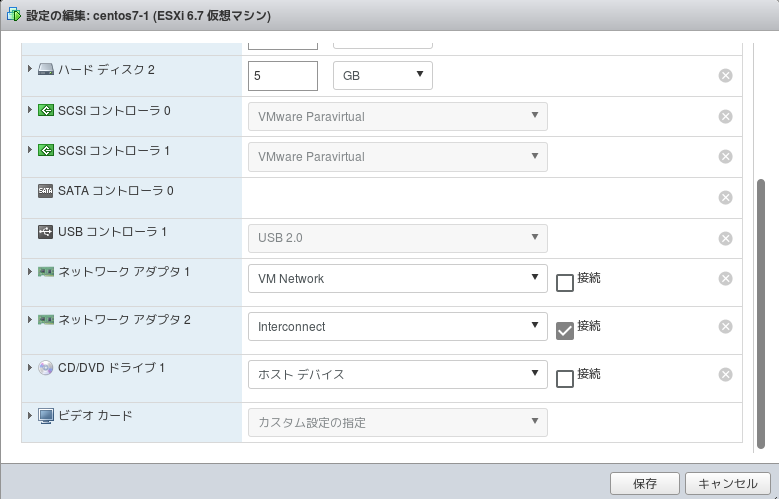

VMware Host Clientによる仮想共有ディスクファイルの作成方法

下記は同じ(一つの)仮想ディスクを2台の仮想ホストによりSCSIで共有させる(いずれかの仮想ホストからのみマウント)時に実施した方法です。

DRBDを構築する場合、各仮想ホストで同サイズの仮想ディスクを作成し、各仮想ホストに接続する必要がありますので、各仮想ホストで仮想ディスクを新規作成してください。

事前にディレクトリ下記のディレクトリを作成します。

# ssh vmware password: [root@vmware:~] mkdir /vmfs/volumes/datastore2/com_datastoreVMware Host Clientからログインし、共有ディスク作成します。 (上記のコマンドによる作成方法では、eagerzeroedthickタイプで認識されませんでした。) 1つ目のノード(centos7-1)で共有ディスクを作成します。

-

新たにSCSIコントローラを作成します。

SCSIバスの共有:仮想

※同一のESXiに存在するノード間でディスクを共有したい場合は「仮想」を選択し、複数のESXiをまたいでディスクを共有したい場合は「物理」を選択する。

-

ハードディスクを追加します。

「ハードディスクの追加」 - 「新規標準ハードディスク」

「新規ハードディスク」の右側の▶をクリックし展開します。

以下は設定した箇所

容量:5GB

場所:[datastore2] com_datastore/com1.vmdk

ディスク プロビジョニング:シック プロビジョニング (Eager Zeroed)

シェア:標準

制限-IOPs:制限なし

コントローラの場所:SCSIコントローラ1 SCSI (1:0)

ディスクモード:独立型:通常

共有:マルチライターの共有

右下の「保存」をクリックすると共有ディスクが作成されます。

2つ目のノード(centos7-2)で上記で作成した共有ディスクを利用してSCSIコントローラ等を作成します。

-

新たにSCSIコントローラを作成します。

SCSIバスの共有:仮想

-

ハードディスクを追加します。

「ハードディスクの追加」 - 「既存のハードディスク」で「[datastore2] com_datastore/com1.vmdk」を選択します。

タイプが「シック プロビジョニング (Lazy Zeroed)」になっていても、この状態のまま一度保存します。

再度、該当VMの設定を開きます。

すると、タイプが「シック プロビジョニング (Eager Zeroed) 」として表示されるはずです。

以下を設定します。

シェア:標準

制限-IOPs:制限なし

コントローラの場所:SCSIコントローラ1 SCSI (1:0)

ディスクモード:独立型:通常

共有:マルチライターの共有

右下の「保存」をクリックします。

起動後、各ノード(centos7-1、centos7-2)からディスクのデバイスを見ることができるか確認します。

■centos7-1で確認 [root@centos7-1 ~]# fdisk -l /dev/sdb Disk /dev/sdb: 5368 MB, 5368709120 bytes, 10485760 sectors Units = sectors of 1 * 512 = 512 bytes Sector size (logical/physical): 512 bytes / 512 bytes I/O サイズ (最小 / 推奨): 512 バイト / 512 バイト ■centos7-2で確認 [root@centos7-2 ~]# fdisk -l /dev/sdb Disk /dev/sdb: 5368 MB, 5368709120 bytes, 10485760 sectors Units = sectors of 1 * 512 = 512 bytes Sector size (logical/physical): 512 bytes / 512 bytes I/O サイズ (最小 / 推奨): 512 バイト / 512 バイト

パーティションの設定

デバイスが認識されましたので、ファイルシステムの作成及びパーティションを設定します。

作成したパーティションをフォーマットします。デバイスを間違えないよう注意してください。[root@centos7-1 ~]# fdisk /dev/sdb Welcome to fdisk (util-linux 2.23.2). Changes will remain in memory only, until you decide to write them. Be careful before using the write command. Device does not contain a recognized partition table Building a new DOS disklabel with disk identifier 0x631b680e. コマンド (m でヘルプ): m コマンドの動作 a toggle a bootable flag b edit bsd disklabel c toggle the dos compatibility flag d delete a partition g create a new empty GPT partition table G create an IRIX (SGI) partition table l list known partition types m print this menu n add a new partition o create a new empty DOS partition table p print the partition table q quit without saving changes s create a new empty Sun disklabel t change a partition's system id u change display/entry units v verify the partition table w write table to disk and exit x extra functionality (experts only) 現時点でのパーティション情報を表示させます。 コマンド (m でヘルプ): p Disk /dev/sdb: 5368 MB, 5368709120 bytes, 10485760 sectors Units = sectors of 1 * 512 = 512 bytes Sector size (logical/physical): 512 bytes / 512 bytes I/O サイズ (最小 / 推奨): 512 バイト / 512 バイト Disk label type: dos ディスク識別子: 0x631b680e デバイス ブート 始点 終点 ブロック Id システム パーティションの設定作業未実施のため、何も表示されません。 パーティションを設定します。 コマンド (m でヘルプ): n Partition type: p primary (0 primary, 0 extended, 4 free) e extended プライマリパーティションとして作成します。 サイズは全領域を指定します。 Select (default p): p パーティション番号 (1-4, default 1): (リターン) 最初 sector (2048-10485759, 初期値 2048): (リターン) 初期値 2048 を使います Last sector, +sectors or +size{K,M,G} (2048-10485759, 初期値 10485759): (リターン) 初期値 10485759 を使います Partition 1 of type Linux and of size 5 GiB is set ファイルシステムが作成されているか確認します。 コマンド (m でヘルプ): p Disk /dev/sdb: 5368 MB, 5368709120 bytes, 10485760 sectors Units = sectors of 1 * 512 = 512 bytes Sector size (logical/physical): 512 bytes / 512 bytes I/O サイズ (最小 / 推奨): 512 バイト / 512 バイト Disk label type: dos ディスク識別子: 0x631b680e デバイス ブート 始点 終点 ブロック Id システム /dev/sdb1 2048 10485759 5241856 83 Linux コマンド (m でヘルプ): w パーティションテーブルは変更されました!

下記コマンドでマウントします。[root@centos7-1 ~]# mkfs.ext4 /dev/sdb1 mke2fs 1.42.9 (28-Dec-2013) Filesystem label= OS type: Linux Block size=4096 (log=2) Fragment size=4096 (log=2) Stride=0 blocks, Stripe width=0 blocks 327680 inodes, 1310464 blocks 65523 blocks (5.00%) reserved for the super user First data block=0 Maximum filesystem blocks=1342177280 40 block groups 32768 blocks per group, 32768 fragments per group 8192 inodes per group Superblock backups stored on blocks: 32768, 98304, 163840, 229376, 294912, 819200, 884736 Allocating group tables: done Writing inode tables: done Creating journal (32768 blocks): done Writing superblocks and filesystem accounting information: done

[root@centos7-1 ~]# mount /dev/sdb1 /mnt

●Pacemaker及びCorosyncのインストール・初期設定(CentOS Stream 8)

参考URL:CentOS 8 + PacemakerでSquidとUnboundを冗長化する

参考URL:CentOS 8.1 (1911)でPacemaker / Corosyncが利用可能に

CentOS 7の場合は「 Pacemaker及びCorosyncのインストール・初期設定 」以降を参照してください。

CentOS 8からクラスタソフトのリポジトリが変更されたようです。

pacemaker及びpcsをインストールします。# dnf repolist all repo id repo の名前 状態 appstream CentOS Stream 8 - AppStream 有効化 baseos CentOS Stream 8 - BaseOS 有効化 debuginfo CentOS Stream 8 - Debuginfo 無効化 epel Extra Packages for Enterprise Linux 8 - x86_64 無効化 epel-debuginfo Extra Packages for Enterprise Linux 8 - x86_64 - Debug 無効化 epel-modular Extra Packages for Enterprise Linux Modular 8 - x86_64 有効化 epel-modular-debuginfo Extra Packages for Enterprise Linux Modular 8 - x86_64 - Debug 無効化 epel-modular-source Extra Packages for Enterprise Linux Modular 8 - x86_64 - Source 無効化 epel-playground Extra Packages for Enterprise Linux 8 - Playground - x86_64 無効化 epel-playground-debuginfo Extra Packages for Enterprise Linux 8 - Playground - x86_64 - Debug 無効化 epel-playground-source Extra Packages for Enterprise Linux 8 - Playground - x86_64 - Source 無効化 epel-source Extra Packages for Enterprise Linux 8 - x86_64 - Source 無効化 epel-testing Extra Packages for Enterprise Linux 8 - Testing - x86_64 無効化 epel-testing-debuginfo Extra Packages for Enterprise Linux 8 - Testing - x86_64 - Debug 無効化 epel-testing-modular Extra Packages for Enterprise Linux Modular 8 - Testing - x86_64 無効化 epel-testing-modular-debuginfo Extra Packages for Enterprise Linux Modular 8 - Testing - x86_64 - Debug 無効化 epel-testing-modular-source Extra Packages for Enterprise Linux Modular 8 - Testing - x86_64 - Source 無効化 epel-testing-source Extra Packages for Enterprise Linux 8 - Testing - x86_64 - Source 無効化 extras CentOS Stream 8 - Extras 有効化 ha CentOS Stream 8 - HighAvailability 無効化 media-appstream CentOS Stream 8 - Media - AppStream 無効化 media-baseos CentOS Stream 8 - Media - BaseOS 無効化 powertools CentOS Stream 8 - PowerTools 無効化 rt CentOS Stream 8 - RealTime 無効化

※両ノードで実施 # dnf --enablerepo=ha install -y pacemaker pcs fence-agents-all/etc/hostsを設定します。

クラスタ用ユーザを設定する。yumでインストールすると、「hacluster」ユーザが作成されているはずなので確認します。

※両ノードで実施 [root@centos8-1 ~]# cat /etc/passwd | grep hacluster hacluster:x:189:189:cluster user:/home/hacluster:/sbin/nologin [root@centos8-2 ~]# cat /etc/passwd | grep hacluster hacluster:x:189:189:cluster user:/home/hacluster:/sbin/nologinパスワードを設定します。両ノードで同一のパスワードです。

※両ノードで実施 # passwd hacluster ユーザ hacluster のパスワードを変更。 新しいパスワード: 新しいパスワードを再入力してください: passwd: すべての認証トークンが正しく更新できました。

# vi /etc/hosts 10.0.0.41 centos8-str1 10.0.0.42 centos8-str2 192.168.0.41 centos8-str1c 192.168.0.42 centos8-str2cクラスタサービスを起動します。

# systemctl enable --now pcsd or # systemctl start pcsd.service # systemctl enable pcsd.service以上でインストール作業は完了です。

クラスタサービスを利用できるようファイアーウォールを設定します。

※両ノードで実施 # firewall-cmd --add-service=high-availability --permanent success # firewall-cmd --reload success以降、クラスタの初期設定を行います。

※片方のノードで実施

[root@centos8-str1 ~]# pcs host auth centos8-str1 centos8-str2 Username: hacluster Password: centos8-str2: Authorized centos8-str1: Authorized

Pacemaker・Corosyncのクラスタを作成(CentOS Stream 8)

172.17.0/24側に仮想IPを設定するため、下記のように実行しました。

[root@centos8-str1 ~]# pcs cluster setup --start bigbang centos8-str1 addr=10.0.0.41 addr=192.168.0.41 \

centos8-str2 addr=10.0.0.42 addr=192.168.0.42

Destroying cluster on hosts: 'centos8-str1', 'centos8-str2'...

centos8-str1: Successfully destroyed cluster

centos8-str2: Successfully destroyed cluster

Requesting remove 'pcsd settings' from 'centos8-str1', 'centos8-str2'

centos8-str1: successful removal of the file 'pcsd settings'

centos8-str2: successful removal of the file 'pcsd settings'

Sending 'corosync authkey', 'pacemaker authkey' to 'centos8-str1', 'centos8-str2'

centos8-str1: successful distribution of the file 'corosync authkey'

centos8-str1: successful distribution of the file 'pacemaker authkey'

centos8-str2: successful distribution of the file 'corosync authkey'

centos8-str2: successful distribution of the file 'pacemaker authkey'

Sending 'corosync.conf' to 'centos8-str1', 'centos8-str2'

centos8-str1: successful distribution of the file 'corosync.conf'

centos8-str2: successful distribution of the file 'corosync.conf'

Cluster has been successfully set up.

Starting cluster on hosts: 'centos8-str1', 'centos8-str2'...

クラスタの初期状態を確認します。

設定したホスト名でクラスタが2台ともオンラインとなっています。[root@centos8-str1 ~]# pcs status Cluster name: bigbang WARNINGS: No stonith devices and stonith-enabled is not false Cluster Summary: * Stack: corosync * Current DC: centos8-str2 (version 2.0.5-8.el8-ba59be7122) - partition with quorum * Last updated: Fri Mar 5 14:33:04 2021 * Last change: Fri Mar 5 14:32:07 2021 by hacluster via crmd on centos8-str2 * 2 nodes configured * 0 resource instances configured Node List: * Online: [ centos8-str1 centos8-str2 ] Full List of Resources: * No resources Daemon Status: corosync: active/disabled pacemaker: active/disabled pcsd: active/enabled

クラスタの管理通信の確認は以下コマンドで実施します。2つのリンクが設定されていることがわかります。

[root@centos8-str1 ~]# corosync-cfgtool -s Printing link status. Local node ID 1 LINK ID 0 addr = 10.0.0.41 status: nodeid 1: localhost nodeid 2: connected LINK ID 1 addr = 192.168.0.41 status: nodeid 1: localhost nodeid 2: connected

クラスタのプロパティを設定(CentOS Stream 8)

参考URL:Pacemaker/Corosync の設定値について

-

no-quorum-policy: クラスタがquorumを持っていないときの動作を定義します。デフォルトはstop(全リソース停止)です。

2台構成の場合は、片方が停止しても動作を続行できるよう、ignore(全リソースはそのまま動作を続行)を指定します。 -

stonith-enabled: フェンシング(STONITH)を有効にするかどうかを指定します。デフォルトはtrue(有効)です。

これが有効、かつSTONITHリソースが未設定の場合リソースが起動できません。 -

cluster-recheck-interval: クラスタのチェック間隔を指定します。デフォルトは15分です。

failure-timeout(failcountを自動クリアするまでの時間)など、時間ベースの動作が反映されるまでの時間に影響します。

検証目的ですので、STONITHは無効化します。クラスタの設定として、STONITHの無効化を行い、no-quorum-policyをignoreに設定します。

本来、スプリットブレイン発生時の対策として、STONITHは有効化するべきです。

no-quorum-policyについて、通常クォーラムは多数決の原理でアクティブなノードを決定する仕組みですが、2台構成のクラスタの場合は多数決による決定ができません。

この場合、クォーラム設定は「ignore」に設定するのがセオリーのようです。

この時、スプリットブレインが発生したとしても、各ノードのリソースは特に何も制御されないという設定となるため、STONITHによって片方のノードを強制的に電源を落として対応することになります。

[root@centos8-str1 ~]# pcs property Cluster Properties: cluster-infrastructure: corosync cluster-name: bigbang dc-version: 2.0.5-8.el8-ba59be7122 have-watchdog: false # pcs property set stonith-enabled=false # pcs property set no-quorum-policy=ignore # pcs property Cluster Properties: cluster-infrastructure: corosync cluster-name: bigbang dc-version: 2.0.5-8.el8-ba59be7122 have-watchdog: false no-quorum-policy: ignore stonith-enabled: false自動フェールバックを無効とします(Warningは無視します)。

# pcs resource defaults resource-stickiness=INFINITY Warning: This command is deprecated and will be removed. Please use 'pcs resource defaults update' instead. Warning: Defaults do not apply to resources which override them with their own defined values

リソースエージェントを使ってリソースを構成する(CentOS Stream 8)

参考URL:動かして理解するPacemaker ~CRM設定編~ その2

リソースを制御するために、リソースエージェントを利用します。リソースエージェントとは、クラスタソフトで用意されているリソースの起動・監視・停止を制御するためのスクリプトとなります。

今回の構成では、以下のリソースエージェントを利用します。

- ocf:heartbeat:IPaddr2 : 仮想IPアドレスを制御

- ocf:heartbeat:Filesystem : ファイルシステムのマウントを制御

- systemd:mariadb : MariaDBを制御

# pcs resource describe <リソースエージェント>まず、仮想IPアドレスの設定を行います。付与するIPアドレス・サブネットマスク・NICを指定します。また、interval=30sとして監視間隔を30秒に変更します。

※片方のノードで実施 [root@centos8-str1 ~]# pcs resource create VirtualIP ocf:heartbeat:IPaddr2 ip=10.0.0.140 cidr_netmask=24 nic=ens192 op monitor interval=30sVIP resource を定義 (IPaddr2 は Linux 向けの VIP 設定、場所は /usr/lib/ocf/resource.d/heartbeat/IPaddr2)

- IPaddr

manages virtual IPv4 addresses (portable version) - IPaddr2

manages virtual IPv4 addresses (Linux specific version)

※片方のノードで実施 [root@centos8-str1 ~]# pcs resource create ShareDir ocf:heartbeat:Filesystem device=/dev/sdb1 directory=/mnt fstype=ext4最後にMariaDBの設定です。こちらはシンプルに以下コマンドで設定するだけです。

※片方のノードで実施 [root@centos8-str1 ~]# pcs resource create MariaDB systemd:mariadb上記3つのリソースは起動・停止の順番を考慮する必要があります。

起動:「仮想IPアドレス」→「ファイルシステム」→「MariaDB」

停止:「MariaDB」→「ファイルシステム」→「仮想IPアドレス」

この順序を制御するために、リソースの順序を付けてグループ化した「リソースグループ」を作成します。

※片方のノードで実施 [root@centos8-str1 ~]# pcs resource group add rg01 VirtualIP ShareDir MariaDB以上でリソース設定が完了となりますので、クラスタの設定を確認します。

リソース設定の詳細内容を確認したい場合、下記コマンドを利用します。※片方のノードで実施 [root@centos8-str1 ~]# pcs status Cluster name: bigbang Cluster Summary: * Stack: corosync * Current DC: centos8-str2 (version 2.0.5-8.el8-ba59be7122) - partition with quorum * Last updated: Fri Mar 5 14:38:16 2021 * Last change: Fri Mar 5 14:38:07 2021 by root via cibadmin on centos8-str1 * 2 nodes configured * 3 resource instances configured Node List: * Online: [ centos8-str1 centos8-str2 ] Full List of Resources: * Resource Group: rg01: * VirtualIP (ocf::heartbeat:IPaddr2): Started centos8-str1 * ShareDir (ocf::heartbeat:Filesystem): Started centos8-str1 * MariaDB (systemd:mariadb): Started centos8-str1 Daemon Status: corosync: active/disabled pacemaker: active/disabled pcsd: active/enabled

問題なく設定されています。[root@centos8-str1 ~]# pcs resource config Group: rg01 Resource: VirtualIP (class=ocf provider=heartbeat type=IPaddr2) Attributes: cidr_netmask=24 ip=10.0.0.140 nic=ens192 Operations: monitor interval=30s (VirtualIP-monitor-interval-30s) start interval=0s timeout=20s (VirtualIP-start-interval-0s) stop interval=0s timeout=20s (VirtualIP-stop-interval-0s) Resource: ShareDir (class=ocf provider=heartbeat type=Filesystem) Attributes: device=/dev/sdb1 directory=/mnt fstype=ext4 Operations: monitor interval=20s timeout=40s (ShareDir-monitor-interval-20s) start interval=0s timeout=60s (ShareDir-start-interval-0s) stop interval=0s timeout=60s (ShareDir-stop-interval-0s) Resource: MariaDB (class=systemd type=mariadb) Operations: monitor interval=60 timeout=100 (MariaDB-monitor-interval-60) start interval=0s timeout=100 (MariaDB-start-interval-0s) stop interval=0s timeout=100 (MariaDB-stop-interval-0s)

クラスタの起動(CentOS Stream 8)

クラスタを起動します。

[root@centos8-str1 ~]# pcs cluster start --all centos8-str1: Starting Cluster... centos8-str2: Starting Cluster...起動後のステータスを確認します。

[root@centos8-str1 ~]# pcs status Cluster name: bigbang Cluster Summary: * Stack: corosync * Current DC: centos8-str2 (version 2.0.5-8.el8-ba59be7122) - partition with quorum * Last updated: Fri Mar 5 14:45:03 2021 * Last change: Fri Mar 5 14:38:07 2021 by root via cibadmin on centos8-str1 * 2 nodes configured * 3 resource instances configured Node List: * Online: [ centos8-str1 centos8-str2 ] Full List of Resources: * Resource Group: rg01: * VirtualIP (ocf::heartbeat:IPaddr2): Started centos8-str1 * ShareDir (ocf::heartbeat:Filesystem): Started centos8-str1 * MariaDB (systemd:mariadb): Started centos8-str1 Daemon Status: corosync: active/disabled pacemaker: active/disabled pcsd: active/enabled

手動フェールオーバーその1(CentOS Stream 8)

手動でフェールオーバーさせるために、わざわざサーバーを再起動させるのは面倒なので、コマンドでリソースグループをフェールオーバーさせます。

[root@centos8-str1 ~]# pcs resource status

* Resource Group: rg01:

* VirtualIP (ocf::heartbeat:IPaddr2): Started centos8-str1

* ShareDir (ocf::heartbeat:Filesystem): Started centos8-str1

* MariaDB (systemd:mariadb): Started centos8-str1

コマンドでリソースグループrg01をノードcentos8-str2に移動させます。

[root@centos8-str1 ~]# pcs resource move rg01 centos8-str2

[root@centos8-str1 ~]# pcs resource move rg01

[root@centos8-str1 ~]# pcs resource clear rg01

リソースグループがcentos8-str2に移動していることが分かります。

[root@centos8-str1 ~]# pcs resource status

* Resource Group: rg01:

* VirtualIP (ocf::heartbeat:IPaddr2): Started centos8-str2

* ShareDir (ocf::heartbeat:Filesystem): Started centos8-str2

* MariaDB (systemd:mariadb): Started centos8-str2

リソースグループをフェイルバックさせます。

[[root@centos8-str1 ~]# pcs resource move rg01 centos8-str1

[root@centos8-str1 ~]# pcs resource move rg01

[root@centos8-str1 ~]# pcs resource clear rg01

元の状態に戻っていることが分かります。

[root@centos8-str1 ~]# pcs resource status

* Resource Group: rg01:

* VirtualIP (ocf::heartbeat:IPaddr2): Started centos8-str1

* ShareDir (ocf::heartbeat:Filesystem): Started centos8-str1

* MariaDB (systemd:mariadb): Started centos8-str1

手動フェールオーバーその2(CentOS Stream 8)

コマンドでフェールオーバーさせる手順以外にも、ノードをスタンバイにすることで強制的にリソースグループを移動させることもできます。

現在リソースグループが起動しているcentos8-str1をスタンバイにします。[root@centos8-str1 ~]# pcs status Cluster name: bigbang Cluster Summary: * Stack: corosync * Current DC: centos8-str2 (version 2.0.5-8.el8-ba59be7122) - partition with quorum * Last updated: Fri Mar 5 14:48:22 2021 * Last change: Fri Mar 5 14:47:55 2021 by root via crm_resource on centos8-str1 * 2 nodes configured * 3 resource instances configured Node List: * Online: [ centos8-str1 centos8-str2 ] Full List of Resources: * Resource Group: rg01: * VirtualIP (ocf::heartbeat:IPaddr2): Started centos8-str1 * ShareDir (ocf::heartbeat:Filesystem): Started centos8-str1 * MariaDB (systemd:mariadb): Started centos8-str1 Daemon Status: corosync: active/disabled pacemaker: active/disabled pcsd: active/enabled

[root@centos8-str1 ~]# pcs node standby centos8-str1リソースグループrg01が移動していることが分かります。

リソースグループrg01をフェイルバックさせるには下記のようにします。[root@centos8-str1 ~]# pcs status Cluster name: bigbang Cluster Summary: * Stack: corosync * Current DC: centos8-str2 (version 2.0.5-8.el8-ba59be7122) - partition with quorum * Last updated: Fri Mar 5 14:49:16 2021 * Last change: Fri Mar 5 14:49:04 2021 by root via cibadmin on centos8-str1 * 2 nodes configured * 3 resource instances configured Node List: * Node centos8-str1: standby * Online: [ centos8-str2 ] Full List of Resources: * Resource Group: rg01: * VirtualIP (ocf::heartbeat:IPaddr2): Started centos8-str2 * ShareDir (ocf::heartbeat:Filesystem): Started centos8-str2 * MariaDB (systemd:mariadb): Started centos8-str2 Daemon Status: corosync: active/disabled pacemaker: active/disabled pcsd: active/enabled

[root@centos8-str1 ~]# pcs node unstandby centos8-str1 [root@centos8-str1 ~]# pcs node standby centos8-str2 [root@centos8-str1 ~]# pcs node unstandby centos8-str2元に戻っていることが確認できます。

[root@centos8-str1 ~]# pcs status Cluster name: bigbang Cluster Summary: * Stack: corosync * Current DC: centos8-str2 (version 2.0.5-8.el8-ba59be7122) - partition with quorum * Last updated: Fri Mar 5 14:50:19 2021 * Last change: Fri Mar 5 14:50:14 2021 by root via cibadmin on centos8-str1 * 2 nodes configured * 3 resource instances configured Node List: * Online: [ centos8-str1 centos8-str2 ] Full List of Resources: * Resource Group: rg01: * VirtualIP (ocf::heartbeat:IPaddr2): Started centos8-str1 * ShareDir (ocf::heartbeat:Filesystem): Started centos8-str1 * MariaDB (systemd:mariadb): Started centos8-str1 Daemon Status: corosync: active/disabled pacemaker: active/disabled pcsd: active/enabled

サーバーダウン障害(CentOS Stream 8)

VMware Host Clientにて、仮想マシンの再起動を実施します。

[root@centos8-str1 ~]# shutdown -r now Connection to centos8-str1 closed by remote host. Connection to centos8-str1 closed.

リソースグループrg01が移動していることが分かります。

障害になったノードは起動後、クラスタに組み込まれていない状態となります。[root@centos8-str2 ~]# pcs status Cluster name: bigbang Cluster Summary: * Stack: corosync * Current DC: centos8-str2 (version 2.0.5-8.el8-ba59be7122) - partition with quorum * Last updated: Fri Mar 5 14:56:47 2021 * Last change: Fri Mar 5 14:50:14 2021 by root via cibadmin on centos8-str1 * 2 nodes configured * 3 resource instances configured Node List: * Online: [ centos8-str2 ] * OFFLINE: [ centos8-str1 ] Full List of Resources: * Resource Group: rg01: * VirtualIP (ocf::heartbeat:IPaddr2): Started centos8-str2 * ShareDir (ocf::heartbeat:Filesystem): Started centos8-str2 * MariaDB (systemd:mariadb): Started centos8-str2 Daemon Status: corosync: active/disabled pacemaker: active/disabled pcsd: active/enabled

このままではクラスタとして稼働しませんので、元の状態にするためにクラスタに組み込みます。

[root@centos8-str2 ~]# pcs cluster start centos8-str1 centos8-str1: Starting Cluster...状態を確認すると、元の状態に戻っていることが分かります。

[root@centos8-str2 ~]# pcs statusCluster name: bigbang Cluster Summary: * Stack: corosync * Current DC: centos8-str2 (version 2.0.5-8.el8-ba59be7122) - partition with quorum * Last updated: Fri Mar 5 14:57:50 2021 * Last change: Fri Mar 5 14:50:14 2021 by root via cibadmin on centos8-str1 * 2 nodes configured * 3 resource instances configured Node List: * Online: [ centos8-str1 centos8-str2 ] Full List of Resources: * Resource Group: rg01: * VirtualIP (ocf::heartbeat:IPaddr2): Started centos8-str1 * ShareDir (ocf::heartbeat:Filesystem): Started centos8-str1 * MariaDB (systemd:mariadb): Started centos8-str1 Daemon Status: corosync: active/disabled pacemaker: active/disabled pcsd: active/enabled

NIC障害(CentOS Stream 8)

参考URL:6.6. リソースの動作

VIPの監視にon-fail=standbyのオプションを指定しないとうまく切り替わらないので、あらかじめ設定しておきます。

疑似NIC障害を起こすため、仮想マシンのNICを切断(チェックを外します。上手く動作しない場合、完全に削除します。)し保存します。[root@centos8-str1 ~]# pcs resource update VirtualIP op monitor on-fail=standby [root@centos8-str1 ~]# pcs resource config Group: rg01 Resource: VirtualIP (class=ocf provider=heartbeat type=IPaddr2) Attributes: cidr_netmask=24 ip=10.0.0.140 nic=ens192 Operations: monitor interval=60s on-fail=standby (VirtualIP-monitor-interval-60s) start interval=0s timeout=20s (VirtualIP-start-interval-0s) stop interval=0s timeout=20s (VirtualIP-stop-interval-0s) Resource: ShareDir (class=ocf provider=heartbeat type=Filesystem) Attributes: device=/dev/sdb1 directory=/mnt fstype=ext4 Operations: monitor interval=20s timeout=40s (ShareDir-monitor-interval-20s) start interval=0s timeout=60s (ShareDir-start-interval-0s) stop interval=0s timeout=60s (ShareDir-stop-interval-0s) Resource: MariaDB (class=systemd type=mariadb) Operations: monitor interval=60 timeout=100 (MariaDB-monitor-interval-60) start interval=0s timeout=100 (MariaDB-start-interval-0s) stop interval=0s timeout=100 (MariaDB-stop-interval-0s)

切断するNIC(チェックを外します。上手く動作しない場合、完全に削除します。)は仮想IPアドレスが割り当てられるネットワークに属するNICアダプタです。

1分程度でリソースVirtualIPがFAILEDステータスになり、リソースグループがフェールオーバーされます。

削除したネットワークアダプタを復活させます。[root@centos8-str2 ~]# pcs status Cluster name: bigbang Cluster Summary: * Stack: corosync * Current DC: centos8-str2 (version 2.0.5-8.el8-ba59be7122) - partition with quorum * Last updated: Fri Mar 5 15:05:45 2021 * Last change: Fri Mar 5 14:58:41 2021 by root via cibadmin on centos8-str2 * 2 nodes configured * 3 resource instances configured Node List: * Node centos8-str1: standby (on-fail) * Online: [ centos8-str2 ] Full List of Resources: * Resource Group: rg01: * VirtualIP (ocf::heartbeat:IPaddr2): Started centos8-str2 * ShareDir (ocf::heartbeat:Filesystem): Started centos8-str2 * MariaDB (systemd:mariadb): Starting centos8-str2 Failed Resource Actions: * VirtualIP_monitor_60000 on centos8-str1 'not running' (7): call=20, status='complete', exitreason='', \ last-rc-change='2021-03-05 15:05:42 +09:00', queued=0ms, exec=0ms Daemon Status: corosync: active/disabled pacemaker: active/disabled pcsd: active/enabled

その後、障害ノードを復旧させ、クラスタに組み込むために以下コマンドを実行します。

上記コマンドでクラスタを復旧させると、リソースグループが元のノードにフェイルバックするので注意が必要です。[root@centos8-str1 ~]# pcs resource cleanup Cleaned up all resources on all nodes Waiting for 1 reply from the controller ... got reply (done) [root@centos8-str1 ~]# pcs status Cluster name: bigbang Cluster Summary: * Stack: corosync * Current DC: centos8-str2 (version 2.0.5-8.el8-ba59be7122) - partition with quorum * Last updated: Fri Mar 5 15:10:57 2021 * Last change: Fri Mar 5 15:10:36 2021 by hacluster via crmd on centos8-str1 * 2 nodes configured * 3 resource instances configured Node List: * Online: [ centos8-str1 centos8-str2 ] Full List of Resources: * Resource Group: rg01: * VirtualIP (ocf::heartbeat:IPaddr2): Started centos8-str1 * ShareDir (ocf::heartbeat:Filesystem): Started centos8-str1 * MariaDB (systemd:mariadb): Started centos8-str1 Daemon Status: corosync: active/disabled pacemaker: active/disabled pcsd: active/enabled

どうやらもともと稼働していたノードのスコアがINFINITYのままになるため、フェイルバックするようです。

この辺りをきちんと制御する意味でも、STONITHの設定を有効にし、障害が発生したノードは強制停止する設定をした方がよいかもしれません。

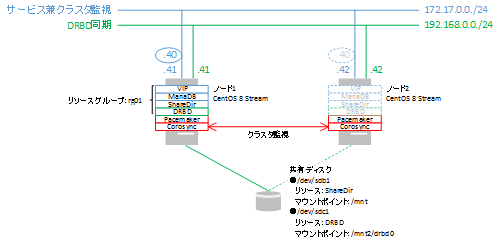

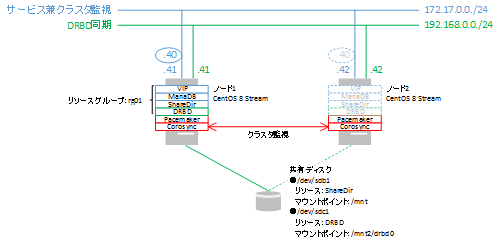

●PacemakerでZabbix-Serverを制御する(DRBD利用)(CentOS Stream 8)

参考URL:Pacemaker/Corosync の設定値について

参考URL:CentOS8でZabbix5をインストールし、Zabbix Agentを監視する

参考URL:HAクラスタをDRBDとPacemakerで作ってみよう [Pacemaker編]

※この方法でPacemakerを構成した場合、シャットダウンする前に下記を実施してください。

1.スタンバイ側のクラスタ機能の停止

2.Zabbixサーバの停止

3.MariaDBの停止

4.シャットダウンの実施

または

1.Pacemakerの停止

2.シャットダウンの実施

必要なソフトをインストールします(インストール済みの場合、該当作業は不要です)。

# dnf install httpd php php-fpm php-mbstring # vi /etc/php.ini date.timezone ="Asia/Tokyo" # dnf install httpdZabbix用のデータベースにMariaDBを利用します。

Paeemakerで制御されるZabbixで使用するMariaDBのデータベースディレクトリをDRBDを使用してマウントされているディレクトリ上に保存しています。

このDRBDもPacemakerにより制御されます。

DRBDの構築方法については「DRBDの構築について」を参照してください。

MariaDBのデータベースディレクトリの変更方法については「MariaDB(MySQL)のデータベースのフォルダを変更するには(pacemaker、DRBD対応)」を参照してください。

特に記載がない場合、2ホストとも同じ作業を行います。

特定のホストでのみ行う作業は、プロンプトにホスト名を記載します。

DRBDは設定済みとします。

DRBDは自動フェールバックを無効としておかないとDRDBでスプリットブレインが発生し、2台ともStandAlone状態となってしまう場合があります。

この対策のため、自動フェールバックを無効とします(Warningは無視します)。

# pcs resource defaults resource-stickiness=INFINITY Warning: This command is deprecated and will be removed. Please use 'pcs resource defaults update' instead. Warning: Defaults do not apply to resources which override them with their own defined valuesクラスタのプロパティは下記を設定済みです。

# pcs property set stonith-enabled=false # pcs property set no-quorum-policy=ignoreSELinuxを無効化していない場合、下記のコマンドを実施し、SELinuxを無効化します。

# vi /etc/selinux/config SELINUX=disabledホストを再起動します。

ファイアーウォールを動作させている場合、各ソフトで使用するポート(http用ポート:80、Zabbix用ポート:10051)を開けてください。

MariaDBは「MariaDB(MySQL)のデータベースのフォルダを変更するには(pacemaker、DRBD対応)」により設定済みとします。

必要なソフトをインストールします(インストール済みの場合、該当作業は不要です)。

# dnf install mariadb mariadb-server # rpm -Uvh https://repo.zabbix.com/zabbix/5.0/rhel/8/x86_64/zabbix-release-5.0-1.el8.noarch.rpm # rpm -Uvh https://repo.zabbix.com/zabbix/5.4/rhel/8/x86_64/zabbix-release-5.4-1.el8.noarch.rpm # dnf clean all # dnf install zabbix-server-mysql zabbix-web-mysql zabbix-apache-conf zabbix-agent # dnf install zabbix-server-mysql zabbix-web-service zabbix-sql-scripts zabbix-agent # dnf install zabbix-server-mysql zabbix-web-service zabbix-sql-scripts zabbix-agent zabbix-web-mysql zabbix-apache-conf zabbix-web-japanese zabbix-getcentos8-str1にてMariaDBが起動していることを確認し、Zabbix用のデータベースを作成します。

[root@centos8-str1 ~]# systemctl start mariadb [root@centos8-str1 ~]# systemctl status mariadb [root@centos8-str1 ~]# mysql_secure_installationNOTE: RUNNING ALL PARTS OF THIS SCRIPT IS RECOMMENDED FOR ALL MariaDB SERVERS IN PRODUCTION USE! PLEASE READ EACH STEP CAREFULLY! In order to log into MariaDB to secure it, we'll need the current password for the root user. If you've just installed MariaDB, and you haven't set the root password yet, the password will be blank, so you should just press enter here. Enter current password for root (enter for none): OK, successfully used password, moving on... Setting the root password ensures that nobody can log into the MariaDB root user without the proper authorisation. Set root password? [Y/n] Y New password: Re-enter new password: Password updated successfully! Reloading privilege tables.. ... Success! By default, a MariaDB installation has an anonymous user, allowing anyone to log into MariaDB without having to have a user account created for them. This is intended only for testing, and to make the installation go a bit smoother. You should remove them before moving into a production environment. Remove anonymous users? [Y/n] Y ... Success! Normally, root should only be allowed to connect from 'localhost'. This ensures that someone cannot guess at the root password from the network. Disallow root login remotely? [Y/n] Y ... Success! By default, MariaDB comes with a database named 'test' that anyone can access. This is also intended only for testing, and should be removed before moving into a production environment. Remove test database and access to it? [Y/n] Y - Dropping test database... ... Success! - Removing privileges on test database... ... Success! Reloading the privilege tables will ensure that all changes made so far will take effect immediately. Reload privilege tables now? [Y/n] Y ... Success! Cleaning up... All done! If you've completed all of the above steps, your MariaDB installation should now be secure. Thanks for using MariaDB!

Zabbixのデータベース設定(CentOS Stream 8)

MariaDBにログインする。

[root@centos8-str1 ~]# mysql -uroot -pzabbix-serverとPHPの設定を編集しておきます。centos8-str1とcentos8-str2の両ホストで編集しておく必要があります。Enter password: MariaDB [(none)]> create database zabbix character set utf8 collate utf8_bin; MariaDB [(none)]> create user zabbix@localhost identified by 'password'; MariaDB [(none)]> grant all privileges on zabbix.* to zabbix@localhost; MariaDB [(none)]> flush privileges; MariaDB [(none)]> quit [root@centos8-str1 ~]# zcat /usr/share/doc/zabbix-server-mysql*/create.sql.gz | mysql -uzabbix -p zabbix [root@centos8-str1 ~]# zcat /usr/share/doc/zabbix-sql-scripts/mysql/create.sql.gz | mysql -uzabbix zabbix -p Enter password: or [root@centos8-str1 ~]# zcat /usr/share/doc/zabbix-sql-scripts/mysql/create.sql.gz | mysql -uzabbix -p zabbix Enter password:

# vi /etc/zabbix/zabbix_server.conf DBSocket=/mnt2/drbd0/mysql/mysql.sock DBHost=localhost DBName=zabbix DBUser=zabbix DBPassword=yourpassword # vi /etc/zabbix/web/zabbix.conf.php $DB['SERVER'] = 'localhost'; ← 変更前 ↓ $DB['SERVER'] = '127.0.0.1'; ← 変更後 ※このファイルをscpで他方のホストにコピーする場合、所有者に注意してください。 [root@centos8-str2 ~]# ls -la /etc/zabbix/web/zabbix.conf.php -rw------- 1 apache apache 1482 3月 26 18:55 /etc/zabbix/web/zabbix.conf.php アクセス権がapacheでない場合、webフロントエンドで configuration file error permission denied と表示されてログインできなくなります。 # vi /etc/php-fpm.d/zabbix.conf php_value[date.timezone] = "Asia/Tokyo"

firewalldの設定

http,Zabbix Agentの通信を許可するために下記のコマンドを実行する。

# firewall-cmd --add-port=10051/tcp --permanent # firewall-cmd --add-service=http --permanent # systemctl restart firewalld

Zabbixサービス起動

centos8-str1でzabbix-server等を起動します。

[root@centos8-str1 ~]# systemctl restart zabbix-server zabbix-agent httpd php-fpmhttp://centos8-str1のIPアドレス/zabbix/ または http://仮想IPアドレス/zabbix/ へアクセスし、Zabbixのセットアップを進めてください。

Zabbix 5.4インストール時の初期設定画面はこちらです。

Next stepを押下します。

全ての項目が「OK」となっていることを確認してください。

/etc/zabbix/zabbix_server.confで設定した値を入力します。

portは0のままで構いません。

- DBHost=localhost

- DBName=zabbix

- DBUser=zabbix

- DBPassword=yourpassword

下記ウィンドウではデフォルトのまま次に進みます。

設定情報を確認し、問題なければ次に進みます。

無事設定が完了しました。

デフォルトのユーザ名とパスワードは下記のとおりです。

- ユーザ名:Admin

- パスワード:zabbix

セットアップ完了後にウィザードによって作成された /etc/zabbix/web/zabbix.conf.php は、centos8-str2にもコピーしておく必要があります。

[root@centos8-str1 ~]# scp /etc/zabbix/web/zabbix.conf.php centos8-str2:/etc/zabbix/web/zabbix.conf.php root@centos8-str2's password: # vi /etc/zabbix/zabbix_server.conf DBSocket=/mnt2/drbd0/mysql/mysql.sock DBHost=localhost DBName=zabbix DBUser=zabbix DBPassword=yourpassword # vi /etc/zabbix/web/zabbix.conf.php $DB['SERVER'] = 'localhost'; ← 変更前 ↓ $DB['SERVER'] = '127.0.0.1'; ← 変更後 ※このファイルをscpで他方のホストにコピーする場合、所有者に注意してください。 [root@centos8-str2 ~]# ls -la /etc/zabbix/web/zabbix.conf.php -rw------- 1 apache apache 1482 3月 26 18:55 /etc/zabbix/web/zabbix.conf.php アクセス権がapacheでない場合、webフロントエンドで configuration file error permission denied と表示されてログインできなくなります。 # vi /etc/php-fpm.d/zabbix.conf php_value[date.timezone] = "Asia/Tokyo"Zabbixのダッシュボードが表示されるところまでの動作を確認できたら、今後、httpd と zabbix-server はPacemakerで制御したいので、centos8-str1で一時的に起動していたリソースを停止します。

設定済みのリソースグループ rg01 に追加するので、クラスタ(pcsd)を停止する必要はありません。

[root@centos8-str1 ~]# systemctl stop httpd [root@centos8-str1 ~]# systemctl stop zabbix-server現在利用しているリソースグループ rg01 に httpd と zabbix-server を追加します。

[root@centos8-str1 ~]# pcs resource create httpd systemd:httpd --group rg01 [root@centos8-str1 ~]# pcs resource create zabbix-server systemd:zabbix-server --group rg01クラスタ状態を確認します。

[root@centos8-str1 ~]# pcs statusクラスタ設定状態を確認します。Cluster name: bigbang Cluster Summary: * Stack: corosync * Current DC: centos8-str2 (version 2.0.5-8.el8-ba59be7122) - partition with quorum * Last updated: Thu Mar 25 15:47:26 2021 * Last change: Thu Mar 25 15:47:08 2021 by root via cibadmin on centos8-str1 * 2 nodes configured * 8 resource instances configured Node List: * Online: [ centos8-str1 centos8-str2 ] Full List of Resources: * Resource Group: rg01: * VirtualIP (ocf::heartbeat:IPaddr2): Started centos8-str1 * ShareDir (ocf::heartbeat:Filesystem): Started centos8-str1 * MariaDB (systemd:mariadb): Started centos8-str1 * FS_DRBD0 (ocf::heartbeat:Filesystem): Started centos8-str1 * httpd (systemd:httpd): Started centos8-str1 * zabbix-server (systemd:zabbix-server): Started centos8-str1 * Clone Set: DRBD-clone [DRBD] (promotable): * Masters: [ centos8-str1 ] * Slaves: [ centos8-str2 ] Daemon Status: corosync: active/disabled pacemaker: active/disabled pcsd: active/enabled

[root@centos8-str1 ~]# pcs config showCluster Name: bigbang Corosync Nodes: centos8-str1 centos8-str2 Pacemaker Nodes: centos8-str1 centos8-str2 Resources: Group: rg01 Resource: VirtualIP (class=ocf provider=heartbeat type=IPaddr2) Attributes: cidr_netmask=24 ip=10.0.0.140 nic=ens192 Operations: monitor interval=60s on-fail=standby (VirtualIP-monitor-interval-60s) start interval=0s timeout=20s (VirtualIP-start-interval-0s) stop interval=0s timeout=20s (VirtualIP-stop-interval-0s) Resource: ShareDir (class=ocf provider=heartbeat type=Filesystem) Attributes: device=/dev/sdb1 directory=/mnt fstype=ext4 Operations: monitor interval=20s timeout=40s (ShareDir-monitor-interval-20s) start interval=0s timeout=60s (ShareDir-start-interval-0s) stop interval=0s timeout=60s (ShareDir-stop-interval-0s) Resource: MariaDB (class=systemd type=mariadb) Operations: monitor interval=60 timeout=100 (MariaDB-monitor-interval-60) start interval=0s timeout=100 (MariaDB-start-interval-0s) stop interval=0s timeout=100 (MariaDB-stop-interval-0s) Resource: FS_DRBD0 (class=ocf provider=heartbeat type=Filesystem) Attributes: device=/dev/drbd0 directory=/mnt2/drbd0 fstype=xfs Operations: monitor interval=20s timeout=40s (FS_DRBD0-monitor-interval-20s) start interval=0s timeout=60s (FS_DRBD0-start-interval-0s) stop interval=0s timeout=60s (FS_DRBD0-stop-interval-0s) Resource: httpd (class=systemd type=httpd) Operations: monitor interval=60 timeout=100 (httpd-monitor-interval-60) start interval=0s timeout=100 (httpd-start-interval-0s) stop interval=0s timeout=100 (httpd-stop-interval-0s) Resource: zabbix-server (class=systemd type=zabbix-server) Operations: monitor interval=60 timeout=100 (zabbix-server-monitor-interval-60) start interval=0s timeout=100 (zabbix-server-start-interval-0s) stop interval=0s timeout=100 (zabbix-server-stop-interval-0s) Clone: DRBD-clone Meta Attrs: clone-max=2 clone-node-max=1 master-max=1 master-node-max=1 notify=true promotable=true Resource: DRBD (class=ocf provider=linbit type=drbd) Attributes: drbd_resource=r0 Operations: demote interval=0s timeout=90 (DRBD-demote-interval-0s) monitor interval=20 role=Slave timeout=20 (DRBD-monitor-interval-20) monitor interval=10 role=Master timeout=20 (DRBD-monitor-interval-10) notify interval=0s timeout=90 (DRBD-notify-interval-0s) promote interval=0s timeout=90 (DRBD-promote-interval-0s) reload interval=0s timeout=30 (DRBD-reload-interval-0s) start interval=0s timeout=240 (DRBD-start-interval-0s) stop interval=0s timeout=100 (DRBD-stop-interval-0s) Stonith Devices: Fencing Levels: Location Constraints: Ordering Constraints: promote DRBD-clone then start rg01 (kind:Mandatory) (id:order-DRBD-clone-rg01-mandatory) promote DRBD-clone then start FS_DRBD0 (kind:Mandatory) (id:order-DRBD-clone-FS_DRBD0-mandatory) Colocation Constraints: DRBD-clone with rg01 (score:INFINITY) (rsc-role:Started) (with-rsc-role:Master) (id:colocation-DRBD-clone-rg01-INFINITY) DRBD-clone with FS_DRBD0 (score:INFINITY) (rsc-role:Started) (with-rsc-role:Master) (id:colocation-DRBD-clone-FS_DRBD0-INFINITY) Ticket Constraints: Alerts: No alerts defined Resources Defaults: No defaults set Operations Defaults: No defaults set Cluster Properties: cluster-infrastructure: corosync cluster-name: bigbang dc-version: 2.0.5-8.el8-ba59be7122 have-watchdog: false last-lrm-refresh: 1616654708 no-quorum-policy: ignore stonith-enabled: false Tags: No tags defined Quorum: Options:

●pacemakerの現在のリソースを破棄し、新たな順序でリソースを作成する(CentOS Stream 8)

参考URL:Pacemaker/Corosync の設定値について

pacemakerのリソースを下記のような順序で起動するように(停止はその逆)再設定します。

- DRBDr0

DRBDリソースr0をMaster/Slaveで設定 - FS_DRBD0

/dev/drbd0をxfs形式で/mnt2/drbd0ディレクトリへマウント。DRBDr0のMaster側で稼働 - ShareDir

/dev/sdb1をxfs形式で/mntディレクトリへマウント - MariaDB

FS_DRBD0でマウントされたデータベースを読み込んでMariaDBを起動。Active/Standby。DRBDr0のMaster側で稼働 - VirtualIP

仮想IPの割り当て。DRBDr0のMaster側で稼働 - Apache

httpdサービスを起動。Active/Standby。DRBDr0のMaster側で稼働 - Zabbix-Server

zabbix-serverサービスを起動。Active/Standby。DRBDr0のMaster側で稼働

新たな順序でリソースを作成するため、現在設定されているリソースを削除します。

[root@centos8-str1 ~]# pcs resource remove zabbix-server Attempting to stop: zabbix-server... Stopped [root@centos8-str1 ~]# pcs resource remove httpd Attempting to stop: httpd... Stopped [root@centos8-str1 ~]# pcs resource remove VirtualIP Attempting to stop: VirtualIP... Stopped [root@centos8-str1 ~]# pcs resource remove MariaDB Attempting to stop: MariaDB... Stopped [root@centos8-str1 ~]# pcs resource remove ShareDir Attempting to stop: ShareDir... Stopped [root@centos8-str1 ~]# pcs resource remove FS_DRBD0 Attempting to stop: FS_DRBD0... Stopped [root@centos8-str1 ~]# pcs resource remove DRBD_r0-clone Attempting to stop: DRBD... StoppedDRBD関連の設定は「●DRBDをpacemakerの現在のリソースに追加する」で設定済みです。

また、1回の障害検知でフェールオーバーするように設定しています。

# pcs resource defaults migration-threshold=1リソースを再作成します。

[root@centos8-str1 ~]# pcs resource create DRBD_r0 ocf:linbit:drbd drbd_resource=r0

[root@centos8-str1 ~]# pcs resource promotable DRBD_r0 master-max=1 master-node-max=1 clone-max=2 clone-node-max=1 notify=true

優先的にcentos8-str1がMasterとして起動するようにします。

スコア値が高いほど優先的に起動するようです。

-INFINITY<-100<0<100<INFINITY

[root@centos8-str1 ~]# pcs constraint location DRBD_r0-clone prefers centos8-str1=100

[root@centos8-str1 ~]# pcs resource cleanup DRBD_r0

[root@centos8-str1 ~]# pcs resource create FS_DRBD0 ocf:heartbeat:Filesystem \

device=/dev/drbd0 directory=/mnt2/drbd0 fstype=xfs --group zabbix-group

[root@centos8-str1 ~]# pcs resource create MariaDB systemd:mariadb --group zabbix-group

[root@centos8-str1 ~]# pcs resource create VirtualIP ocf:heartbeat:IPaddr2 ip=10.0.0.140 \

cidr_netmask=24 nic=ens192 op monitor interval=30s --group zabbix-group

[root@centos8-str1 ~]# pcs resource create httpd systemd:httpd --group zabbix-group

[root@centos8-str1 ~]# pcs resource create zabbix-server systemd:zabbix-server --group zabbix-group

[root@centos8-str1 ~]# pcs resource create ShareDir ocf:heartbeat:Filesystem \

device=/dev/sdb1 directory=/mnt fstype=ext4 --group zabbix-group

起動制約設定

## DRBD_r0がMaster側のノードでFS_DRBD0リソースを起動するように設定 [root@centos8-str1 ~]# pcs constraint colocation add DRBD_r0-clone with Master FS_DRBD0 ## DRBD_r0起動後にFS_DRBD0リソースを起動 [root@centos8-str1 ~]# pcs constraint order promote DRBD_r0-clone then start FS_DRBD0 Adding DRBD_r0-clone FS_DRBD0 (kind: Mandatory) (Options: first-action=promote then-action=start) ## zabbix-groupはDRBD_r0のMasterと同じノードで起動 [root@centos8-str1 ~]# pcs constraint colocation add zabbix-group with Master DRBD_r0-clone INFINITY ## MariaDBはDRBD_r0のMasterと同じノードで起動(異常検知で必要) [root@centos8-str1 ~]# pcs constraint colocation add master DRBD_r0 with MariaDB INFINITY --force [root@centos8-str1 ~]# pcs constraint colocation add master DRBD_r0-clone with MariaDB INFINITY ## VirtualIPはDRBD_r0のMasterと同じノードで起動(異常検知で必要) [root@centos8-str1 ~]# pcs constraint colocation add master DRBD_r0 with VirtualIP INFINITY --force [root@centos8-str1 ~]# pcs constraint colocation add master DRBD_r0-clone with VirtualIP INFINITY ## httpdはDRBD_r0のMasterと同じノードで起動(異常検知で必要) [root@centos8-str1 ~]# pcs constraint colocation add master DRBD_r0 with httpd INFINITY --force [root@centos8-str1 ~]# pcs constraint colocation add master DRBD_r0-clone with httpd INFINITY ## zabbix-serverはDRBD_r0のMasterと同じノードで起動(異常検知で必要) [root@centos8-str1 ~]# pcs constraint colocation add master DRBD_r0 with zabbix-server INFINITY --force [root@centos8-str1 ~]# pcs constraint colocation add master DRBD_r0-clone with zabbix-server INFINITY ## ShareDirはDRBD_r0のMasterと同じノードで起動(異常検知で必要):後日追加 [root@centos8-str1 ~]# pcs constraint colocation add master DRBD_r0 with ShareDir INFINITY --force [root@centos8-str1 ~]# pcs constraint colocation add master DRBD_r0-clone with ShareDir INFINITY [root@centos8-str1 ~]# pcs constraint colocation add master DRBD_r0-clone with ShareDir INFINITYクラスタの状態を確認します。

[root@centos8-str1 ~]# pcs statusconfigの状態を確認します。Cluster name: bigbang Cluster Summary: * Stack: corosync * Current DC: centos8-str1 (version 2.0.5-8.el8-ba59be7122) - partition with quorum * Last updated: Mon Mar 29 11:17:19 2021 * Last change: Mon Mar 29 11:10:05 2021 by root via cibadmin on centos8-str1 * 2 nodes configured * 8 resource instances configured Node List: * Online: [ centos8-str1 centos8-str2 ] Full List of Resources: * Clone Set: DRBD_r0-clone [DRBD_r0] (promotable): * Masters: [ centos8-str1 ] * Slaves: [ centos8-str2 ] * Resource Group: zabbix-group: * FS_DRBD0 (ocf::heartbeat:Filesystem): Started centos8-str1 * MariaDB (systemd:mariadb): Started centos8-str1 * VirtualIP (ocf::heartbeat:IPaddr2): Started centos8-str1 * httpd (systemd:httpd): Started centos8-str1 * zabbix-server (systemd:zabbix-server): Started centos8-str1 * ShareDir (ocf::heartbeat:Filesystem): Started centos8-str1 Daemon Status: corosync: active/disabled pacemaker: active/disabled pcsd: active/enabled

[root@centos8-str1 ~]# pcs config showCluster Name: bigbang Corosync Nodes: centos8-str1 centos8-str2 Pacemaker Nodes: centos8-str1 centos8-str2 Resources: Clone: DRBD_r0-clone Meta Attrs: clone-max=2 clone-node-max=1 master-max=1 master-node-max=1 notify=true promotable=true Resource: DRBD_r0 (class=ocf provider=linbit type=drbd) Attributes: drbd_resource=r0 Operations: demote interval=0s timeout=90 (DRBD_r0-demote-interval-0s) monitor interval=20 role=Slave timeout=20 (DRBD_r0-monitor-interval-20) monitor interval=10 role=Master timeout=20 (DRBD_r0-monitor-interval-10) notify interval=0s timeout=90 (DRBD_r0-notify-interval-0s) promote interval=0s timeout=90 (DRBD_r0-promote-interval-0s) reload interval=0s timeout=30 (DRBD_r0-reload-interval-0s) start interval=0s timeout=240 (DRBD_r0-start-interval-0s) stop interval=0s timeout=100 (DRBD_r0-stop-interval-0s) Group: zabbix-group Resource: FS_DRBD0 (class=ocf provider=heartbeat type=Filesystem) Attributes: device=/dev/drbd0 directory=/mnt2/drbd0 fstype=xfs Operations: monitor interval=20s timeout=40s (FS_DRBD0-monitor-interval-20s) start interval=0s timeout=60s (FS_DRBD0-start-interval-0s) stop interval=0s timeout=60s (FS_DRBD0-stop-interval-0s) Resource: MariaDB (class=systemd type=mariadb) Operations: monitor interval=60 timeout=100 (MariaDB-monitor-interval-60) start interval=0s timeout=100 (MariaDB-start-interval-0s) stop interval=0s timeout=100 (MariaDB-stop-interval-0s) Resource: VirtualIP (class=ocf provider=heartbeat type=IPaddr2) Attributes: cidr_netmask=24 ip=10.0.0.140 nic=ens192 Operations: monitor interval=30s (VirtualIP-monitor-interval-30s) start interval=0s timeout=20s (VirtualIP-start-interval-0s) stop interval=0s timeout=20s (VirtualIP-stop-interval-0s) Resource: httpd (class=systemd type=httpd) Operations: monitor interval=60 timeout=100 (httpd-monitor-interval-60) start interval=0s timeout=100 (httpd-start-interval-0s) stop interval=0s timeout=100 (httpd-stop-interval-0s) Resource: zabbix-server (class=systemd type=zabbix-server) Operations: monitor interval=60 timeout=100 (zabbix-server-monitor-interval-60) start interval=0s timeout=100 (zabbix-server-start-interval-0s) stop interval=0s timeout=100 (zabbix-server-stop-interval-0s) Resource: ShareDir (class=ocf provider=heartbeat type=Filesystem) Attributes: device=/dev/sdb1 directory=/mnt fstype=ext4 Operations: monitor interval=20s timeout=40s (ShareDir-monitor-interval-20s) start interval=0s timeout=60s (ShareDir-start-interval-0s) stop interval=0s timeout=60s (ShareDir-stop-interval-0s) Stonith Devices: Fencing Levels: Location Constraints: Ordering Constraints: promote DRBD_r0-clone then start FS_DRBD0 (kind:Mandatory) (id:order-DRBD_r0-clone-FS_DRBD0-mandatory) Colocation Constraints: DRBD_r0-clone with FS_DRBD0 (score:INFINITY) (rsc-role:Started) (with-rsc-role:Master) (id:colocation-DRBD_r0-clone-FS_DRBD0-INFINITY) FS_DRBD0 with zabbix-group (score:INFINITY) (rsc-role:Master) (with-rsc-role:Started) (id:colocation-FS_DRBD0-zabbix-group-INFINITY) DRBD_r0 with MariaDB (score:INFINITY) (rsc-role:Master) (with-rsc-role:Started) (id:colocation-DRBD_r0-MariaDB-INFINITY) DRBD_r0 with VirtualIP (score:INFINITY) (rsc-role:Master) (with-rsc-role:Started) (id:colocation-DRBD_r0-VirtualIP-INFINITY) DRBD_r0 with httpd (score:INFINITY) (rsc-role:Master) (with-rsc-role:Started) (id:colocation-DRBD_r0-httpd-INFINITY) DRBD_r0 with zabbix-server (score:INFINITY) (rsc-role:Master) (with-rsc-role:Started) (id:colocation-DRBD_r0-zabbix-server-INFINITY) Ticket Constraints: Alerts: No alerts defined Resources Defaults: Meta Attrs: rsc_defaults-meta_attributes migration-threshold=1 Operations Defaults: No defaults set Cluster Properties: cluster-infrastructure: corosync cluster-name: bigbang dc-version: 2.0.5-8.el8-ba59be7122 have-watchdog: false last-lrm-refresh: 1616983434 no-quorum-policy: ignore stonith-enabled: false Tags: No tags defined Quorum: Options:

プロセスを停止した場合(Apache)(CentOS Stream 8)

参考URL:HAクラスタをDRBDとPacemakerで作ってみよう [Pacemaker編]

httpdを停止します。

[root@centos8-str1 ~]# kill -kill `pgrep -f httpd` [root@centos8-str1 ~]# ps axu | grep http[d] (30秒ほど待ちます。) [root@centos8-str1 ~]# pcs status --fullkillされたhttpdがPacemakerにより再スタートされ、fail-countというカウンターがカウントされます。Cluster name: bigbang Cluster Summary: * Stack: corosync * Current DC: centos8-str1 (version 2.0.5-8.el8-ba59be7122) - partition with quorum * Last updated: Mon Mar 29 13:42:44 2021 * Last change: Mon Mar 29 13:24:34 2021 by hacluster via crmd on centos8-str2 * 2 nodes configured * 8 resource instances configured Node List: * Online: [ centos8-str1 (1) centos8-str2 (2) ] Full List of Resources: * Resource Group: rg01: * FS_DRBD0 (ocf::heartbeat:Filesystem): Started centos8-str1 * ShareDir (ocf::heartbeat:Filesystem): Started centos8-str1 * MariaDB (systemd:mariadb): Started centos8-str1 * VirtualIP (ocf::heartbeat:IPaddr2): Started centos8-str1 * httpd (systemd:httpd): Started centos8-str1 ← pacemakerによって新たに起動されています。 * zabbix-server (systemd:zabbix-server): Started centos8-str1 * Clone Set: DRBD-clone [DRBD] (promotable): * DRBD (ocf::linbit:drbd): Slave centos8-str2 * DRBD (ocf::linbit:drbd): Master centos8-str1 Node Attributes: * Node: centos8-str1 (1): * master-DRBD : 10000 * Node: centos8-str2 (2): * master-DRBD : 10000 Migration Summary: * Node: centos8-str1 (1): * httpd: migration-threshold=1000000 fail-count=1 last-failure='Mon Mar 29 13:42:10 2021' ↑ centos8-str1でhttpdにfail-countが加算されています。 Failed Resource Actions: ← centos8-str1でhttpdが起動していなかった事が記録されています。 * httpd_monitor_60000 on centos8-str1 'not running' (7): call=256, \ status='complete', exitreason='', last-rc-change='2021-03-29 13:42:10 +09:00', queued=0ms, exec=0ms Tickets: PCSD Status: centos8-str1: Online centos8-str2: Online Daemon Status: corosync: active/disabled pacemaker: active/disabled pcsd: active/enabled

fail-countがmigration-thresholdに到達すると、リソースは他のノードで起動します。

今回は制約を設定していますので、原因となるリソース以外も他のノードにフェールオーバーすることになります。

一度、このfail-countをリセットします。

[root@centos8-str1 ~]# pcs resource cleanup httpd今度は、migration-thresholdのデフォルト値を変更して、1回でもfail-countがカウントされるとフェールオーバーするようにします。

[root@centos8-str1 ~]# pcs resource defaults migration-threshold=1 Warning: This command is deprecated and will be removed. Please use 'pcs resource defaults update' instead. Warning: Defaults do not apply to resources which override them with their own defined values [root@centos8-str1 ~]# kill -kill `pgrep -f httpd` [root@centos8-str1 ~]# ps axu | grep http[d]他方のホスト(centos8-str2)でApacheを停止させ、元のホスト(centos8-str1)にフェールバックさせます。

[root@centos8-str2 ~]# pkill httpd [root@centos8-str2 ~]# ps axu | grep http[d] (30秒ほど待ちます。) [root@centos8-str2 ~]# pcs status --full元のホスト(centos8-str1)にフェールオーバーしていることが分かります。Cluster name: bigbang Cluster Summary: * Stack: corosync * Current DC: centos8-str1 (1) (version 2.0.5-8.el8-ba59be7122) - partition with quorum * Last updated: Mon Mar 29 13:49:59 2021 * Last change: Mon Mar 29 13:47:34 2021 by hacluster via crmd on centos8-str1 * 2 nodes configured * 8 resource instances configured Node List: * Online: [ centos8-str1 (1) centos8-str2 (2) ] Full List of Resources: * Clone Set: DRBD_r0-clone [DRBD_r0] (promotable): * DRBD_r0 (ocf::linbit:drbd): Master centos8-str1 * DRBD_r0 (ocf::linbit:drbd): Slave centos8-str2 * Resource Group: zabbix-group: * FS_DRBD0 (ocf::heartbeat:Filesystem): Started centos8-str1 * MariaDB (systemd:mariadb): Started centos8-str1 * VirtualIP (ocf::heartbeat:IPaddr2): Started centos8-str1 * httpd (systemd:httpd): Started centos8-str1 * zabbix-server (systemd:zabbix-server): Started centos8-str1 * ShareDir (ocf::heartbeat:Filesystem): Started centos8-str1 Node Attributes: * Node: centos8-str1 (1): * master-DRBD_r0 : 10000 * Node: centos8-str2 (2): * master-DRBD_r0 : 10000 Migration Summary: * Node: centos8-str2 (2): * httpd: migration-threshold=1 fail-count=1 last-failure='Mon Mar 29 13:49:25 2021' Failed Resource Actions: * httpd_monitor_60000 on centos8-str2 'not running' (7): call=134, status='complete', exitreason='', last-rc-change='2021-03-29 13:49:24 +09:00', queued=0ms, exec=0ms Tickets: PCSD Status: centos8-str1: Online centos8-str2: Online Daemon Status: corosync: active/disabled pacemaker: active/disabled pcsd: active/enabled

リソースをクリーンアップして綺麗な状態にしておきます。

[root@centos8-str1 ~]# pcs resource cleanup httpd

プロセスを停止した場合(MariaDB)(CentOS Stream 8)

参考URL:HAクラスタをDRBDとPacemakerで作ってみよう [Pacemaker編]

1回でもfail-countがカウントされるとフェールオーバーするように設定していない場合、migration-thresholdのデフォルト値(migration-threshold=1000000)を変更してフェールオーバーするようにします。

[root@centos8-str1 ~]# pcs resource defaults migration-threshold=1

MariaDBを停止します。

[root@centos8-str1 ~]# kill -kill `pgrep -f mysqld` [root@centos8-str1 ~]# ps axu | grep mysqld (30秒ほど待ちます。) [root@centos8-str1 ~]# pcs status --fullリソースが他方のホスト(centos8-str2)に移動していることが分かります。Cluster name: bigbang Cluster Summary: * Stack: corosync * Current DC: centos8-str1 (1) (version 2.0.5-8.el8-ba59be7122) - partition with quorum * Last updated: Tue Mar 30 09:40:05 2021 * Last change: Mon Mar 29 18:08:20 2021 by hacluster via crmd on centos8-str2 * 2 nodes configured * 8 resource instances configured Node List: * Online: [ centos8-str1 (1) centos8-str2 (2) ] Full List of Resources: * Clone Set: DRBD_r0-clone [DRBD_r0] (promotable): * DRBD_r0 (ocf::linbit:drbd): Slave centos8-str1 * DRBD_r0 (ocf::linbit:drbd): Master centos8-str2 * Resource Group: zabbix-group: * FS_DRBD0 (ocf::heartbeat:Filesystem): Started centos8-str2 * MariaDB (systemd:mariadb): Started centos8-str2 * VirtualIP (ocf::heartbeat:IPaddr2): Started centos8-str2 * httpd (systemd:httpd): Started centos8-str2 * zabbix-server (systemd:zabbix-server): Started centos8-str2 * ShareDir (ocf::heartbeat:Filesystem): Started centos8-str2 Node Attributes: * Node: centos8-str1 (1): * master-DRBD_r0 : 10000 * Node: centos8-str2 (2): * master-DRBD_r0 : 10000 Migration Summary: * Node: centos8-str1 (1): * MariaDB: migration-threshold=1 fail-count=1 last-failure='Tue Mar 30 09:39:21 2021' Failed Resource Actions: * MariaDB_monitor_60000 on centos8-str1 'not running' (7): call=40, status='complete', exitreason='', last-rc-change='2021-03-30 09:39:21 +09:00', queued=0ms, exec=0ms Tickets: PCSD Status: centos8-str1: Online centos8-str2: Online Daemon Status: corosync: active/disabled pacemaker: active/disabled pcsd: active/enabled

エラーをクリーンアップし、フェールバックさせます(下記は正常にフェールバックしないパターンです(2021.03.30現在)。)。

[root@centos8-str2 ~]# pcs resource cleanup MariaDB [root@centos8-str2 ~]# kill -kill `pgrep -f mysqld` [root@centos8-str2 ~]# ps axu | grep mysqld (30秒ほど待ちます。) [root@centos8-str2 ~]# pcs status --full;date確認するとZabbix Serverのプロセスが残っています。Cluster name: bigbang Cluster Summary: * Stack: corosync * Current DC: centos8-str1 (1) (version 2.0.5-8.el8-ba59be7122) - partition with quorum * Last updated: Tue Mar 30 10:02:50 2021 * Last change: Tue Mar 30 09:42:57 2021 by hacluster via crmd on centos8-str1 * 2 nodes configured * 8 resource instances configured (1 BLOCKED from further action due to failure) Node List: * Online: [ centos8-str1 (1) centos8-str2 (2) ] Full List of Resources: * Clone Set: DRBD_r0-clone [DRBD_r0] (promotable): * DRBD_r0 (ocf::linbit:drbd): Slave centos8-str1 * DRBD_r0 (ocf::linbit:drbd): Master centos8-str2 * Resource Group: zabbix-group: * FS_DRBD0 (ocf::heartbeat:Filesystem): Started centos8-str2 * MariaDB (systemd:mariadb): FAILED centos8-str2 * VirtualIP (ocf::heartbeat:IPaddr2): Stopped * httpd (systemd:httpd): Stopped * zabbix-server (systemd:zabbix-server): FAILED centos8-str2 (blocked) * ShareDir (ocf::heartbeat:Filesystem): Stopped Node Attributes: * Node: centos8-str1 (1): * master-DRBD_r0 : 10000 * Node: centos8-str2 (2): * master-DRBD_r0 : 10000 Migration Summary: * Node: centos8-str2 (2): * MariaDB: migration-threshold=1 fail-count=1 last-failure='Tue Mar 30 09:44:32 2021' * zabbix-server: migration-threshold=1 fail-count=1000000 last-failure='Tue Mar 30 09:46:14 2021' Failed Resource Actions: * MariaDB_monitor_60000 on centos8-str2 'not running' (7): call=46, status='complete', exitreason='', last-rc-change='2021-03-30 09:44:32 +09:00', queued=0ms, exec=0ms * zabbix-server_stop_0 on centos8-str2 'OCF_TIMEOUT' (198): call=64, status='Timed Out', exitreason='', last-rc-change='2021-03-30 09:46:14 +09:00', queued=0ms, exec=99987ms Tickets: PCSD Status: centos8-str1: Online centos8-str2: Online Daemon Status: corosync: active/disabled pacemaker: active/disabled pcsd: active/enabled

下記コマンドを実行しましたが、暫くしても反応がないため Ctrl + C で抜けました。

[root@centos8-str2 ~]# systemctl stop zabbix-server ^Cその後、下記コマンドを実施したところZabbix Serverの全てのプロセスがなくなりました。

[root@centos8-str2 ~]# kill -kill `pgrep -f zabbix_server`この時点では、まだ、フェールバックしておらず障害状態のままです。

とりあえず記録されているFailed Resource Actionsをクリーンアップします。

[root@centos8-str2 ~]# pcs resource cleanup MariaDB [root@centos8-str2 ~]# pcs resource cleanup zabbix-server [root@centos8-str2 ~]# pcs constraint colocation add MariaDB with zabbix-server INFINITY [root@centos8-str2 ~]# pcs config showこれにより障害は回復しましたが、リソースが移動することはありませんでした。Cluster Name: bigbang Corosync Nodes: centos8-str1 centos8-str2 Pacemaker Nodes: centos8-str1 centos8-str2 Resources: Clone: DRBD_r0-clone Meta Attrs: clone-max=2 clone-node-max=1 master-max=1 master-node-max=1 notify=true promotable=true Resource: DRBD_r0 (class=ocf provider=linbit type=drbd) Attributes: drbd_resource=r0 Operations: demote interval=0s timeout=90 (DRBD_r0-demote-interval-0s) monitor interval=20 role=Slave timeout=20 (DRBD_r0-monitor-interval-20) monitor interval=10 role=Master timeout=20 (DRBD_r0-monitor-interval-10) notify interval=0s timeout=90 (DRBD_r0-notify-interval-0s) promote interval=0s timeout=90 (DRBD_r0-promote-interval-0s) reload interval=0s timeout=30 (DRBD_r0-reload-interval-0s) start interval=0s timeout=240 (DRBD_r0-start-interval-0s) stop interval=0s timeout=100 (DRBD_r0-stop-interval-0s) Group: zabbix-group Resource: FS_DRBD0 (class=ocf provider=heartbeat type=Filesystem) Attributes: device=/dev/drbd0 directory=/mnt2/drbd0 fstype=xfs Operations: monitor interval=20s timeout=40s (FS_DRBD0-monitor-interval-20s) start interval=0s timeout=60s (FS_DRBD0-start-interval-0s) stop interval=0s timeout=60s (FS_DRBD0-stop-interval-0s) Resource: MariaDB (class=systemd type=mariadb) Operations: monitor interval=60 timeout=100 (MariaDB-monitor-interval-60) start interval=0s timeout=100 (MariaDB-start-interval-0s) stop interval=0s timeout=100 (MariaDB-stop-interval-0s) Resource: VirtualIP (class=ocf provider=heartbeat type=IPaddr2) Attributes: cidr_netmask=24 ip=10.0.0.140 nic=ens192 Operations: monitor interval=30s (VirtualIP-monitor-interval-30s) start interval=0s timeout=20s (VirtualIP-start-interval-0s) stop interval=0s timeout=20s (VirtualIP-stop-interval-0s) Resource: httpd (class=systemd type=httpd) Operations: monitor interval=60 timeout=100 (httpd-monitor-interval-60) start interval=0s timeout=100 (httpd-start-interval-0s) stop interval=0s timeout=100 (httpd-stop-interval-0s) Resource: zabbix-server (class=systemd type=zabbix-server) Operations: monitor interval=60 timeout=100 (zabbix-server-monitor-interval-60) start interval=0s timeout=100 (zabbix-server-start-interval-0s) stop interval=0s timeout=100 (zabbix-server-stop-interval-0s) Resource: ShareDir (class=ocf provider=heartbeat type=Filesystem) Attributes: device=/dev/sdb1 directory=/mnt fstype=ext4 Operations: monitor interval=20s timeout=40s (ShareDir-monitor-interval-20s) start interval=0s timeout=60s (ShareDir-start-interval-0s) stop interval=0s timeout=60s (ShareDir-stop-interval-0s) Stonith Devices: Fencing Levels: Location Constraints: Ordering Constraints: promote DRBD_r0-clone then start FS_DRBD0 (kind:Mandatory) (id:order-DRBD_r0-clone-FS_DRBD0-mandatory) Colocation Constraints: DRBD_r0-clone with FS_DRBD0 (score:INFINITY) (rsc-role:Started) (with-rsc-role:Master) (id:colocation-DRBD_r0-clone-FS_DRBD0-INFINITY) FS_DRBD0 with zabbix-group (score:INFINITY) (rsc-role:Master) (with-rsc-role:Started) (id:colocation-FS_DRBD0-zabbix-group-INFINITY) DRBD_r0 with MariaDB (score:INFINITY) (rsc-role:Master) (with-rsc-role:Started) (id:colocation-DRBD_r0-MariaDB-INFINITY) DRBD_r0 with VirtualIP (score:INFINITY) (rsc-role:Master) (with-rsc-role:Started) (id:colocation-DRBD_r0-VirtualIP-INFINITY) DRBD_r0 with httpd (score:INFINITY) (rsc-role:Master) (with-rsc-role:Started) (id:colocation-DRBD_r0-httpd-INFINITY) DRBD_r0 with zabbix-server (score:INFINITY) (rsc-role:Master) (with-rsc-role:Started) (id:colocation-DRBD_r0-zabbix-server-INFINITY) DRBD_r0 with ShareDir (score:INFINITY) (rsc-role:Master) (with-rsc-role:Started) (id:colocation-DRBD_r0-ShareDir-INFINITY) MariaDB with zabbix-server (score:INFINITY) (id:colocation-MariaDB-zabbix-server-INFINITY) Ticket Constraints: Alerts: No alerts defined Resources Defaults: Meta Attrs: rsc_defaults-meta_attributes migration-threshold=1 Operations Defaults: No defaults set Cluster Properties: cluster-infrastructure: corosync cluster-name: bigbang dc-version: 2.0.5-8.el8-ba59be7122 have-watchdog: false last-lrm-refresh: 1617067515 no-quorum-policy: ignore stonith-enabled: false Tags: No tags defined Quorum: Options:

Zabbix ServerがFAILED centos8-str2 (blocked)となる前(timeout前)に、残存しているZabbix Serverのプロセスを全てkillすることでリソースが移動できることを確認しました。

2021.03.31現在、Pacemaker単独でこれを解決する方法が見つからなかったため、cronで1分毎にMariaDBのプロセスがなくなったこと(MariaDBの停止)を検知したら、強制的にZabbix Serverのプロセスを全てkillする方法としました。

下記に記載していますが、Zabbix Serverのプロセス停止時には正常にリソースが移動することを利用することとしました。

プロセスを停止した場合(Zabbix Server)(CentOS Stream 8)

参考URL:HAクラスタをDRBDとPacemakerで作ってみよう [Pacemaker編]

1回でもfail-countがカウントされるとフェールオーバーするように設定していない場合、migration-thresholdのデフォルト値(migration-threshold=1000000)を変更してフェールオーバーするようにします。

[root@centos8-str1 ~]# pcs resource defaults migration-threshold=1

Zabbix Serverを停止します。

[root@centos8-str1 ~]# kill -kill `pgrep -f zabbix_server` [root@centos8-str1 ~]# ps axu | grep zabbix_server (30秒ほど待ちます。) [root@centos8-str1 ~]# pcs status --fullリソースが他方のホスト(centos8-str2)に移動していることが分かります。Cluster name: bigbang Cluster Summary: * Stack: corosync * Current DC: centos8-str1 (1) (version 2.0.5-8.el8-ba59be7122) - partition with quorum * Last updated: Mon Mar 29 17:41:43 2021 * Last change: Mon Mar 29 17:39:12 2021 by hacluster via crmd on centos8-str2 * 2 nodes configured * 8 resource instances configured Node List: * Online: [ centos8-str1 (1) centos8-str2 (2) ] Full List of Resources: * Clone Set: DRBD_r0-clone [DRBD_r0] (promotable): * DRBD_r0 (ocf::linbit:drbd): Slave centos8-str1 * DRBD_r0 (ocf::linbit:drbd): Master centos8-str2 * Resource Group: zabbix-group: * FS_DRBD0 (ocf::heartbeat:Filesystem): Started centos8-str2 * MariaDB (systemd:mariadb): Started centos8-str2 * VirtualIP (ocf::heartbeat:IPaddr2): Started centos8-str2 * httpd (systemd:httpd): Started centos8-str2 * zabbix-server (systemd:zabbix-server): Started centos8-str2 * ShareDir (ocf::heartbeat:Filesystem): Started centos8-str2 Node Attributes: * Node: centos8-str1 (1): * master-DRBD_r0 : 10000 * Node: centos8-str2 (2): * master-DRBD_r0 : 10000 Migration Summary: * Node: centos8-str1 (1): * zabbix-server: migration-threshold=1 fail-count=1 last-failure='Mon Mar 29 17:41:02 2021' Failed Resource Actions: * zabbix-server_monitor_60000 on centos8-str1 'not running' (7): call=298, status='complete', exitreason='', last-rc-change='2021-03-29 17:41:02 +09:00', queued=0ms, exec=0ms Tickets: PCSD Status: centos8-str1: Online centos8-str2: Online Daemon Status: corosync: active/disabled pacemaker: active/disabled pcsd: active/enabled

エラーをクリーンアップし、フェールオーバーさせます。

[root@centos8-str2 ~]# pcs resource cleanup zabbix-server [root@centos8-str2 ~]# kill -kill `pgrep -f zabbix_server` [root@centos8-str2 ~]# ps axu | grep zabbix_server (30秒ほど待ちます。) [root@centos8-str2 ~]# pcs status --fullCluster name: bigbang Cluster Summary: * Stack: corosync * Current DC: centos8-str1 (1) (version 2.0.5-8.el8-ba59be7122) - partition with quorum * Last updated: Mon Mar 29 17:46:45 2021 * Last change: Mon Mar 29 17:45:27 2021 by hacluster via crmd on centos8-str1 * 2 nodes configured * 8 resource instances configured Node List: * Online: [ centos8-str1 (1) centos8-str2 (2) ] Full List of Resources: * Clone Set: DRBD_r0-clone [DRBD_r0] (promotable): * DRBD_r0 (ocf::linbit:drbd): Master centos8-str1 * DRBD_r0 (ocf::linbit:drbd): Slave centos8-str2 * Resource Group: zabbix-group: * FS_DRBD0 (ocf::heartbeat:Filesystem): Started centos8-str1 * MariaDB (systemd:mariadb): Started centos8-str1 * VirtualIP (ocf::heartbeat:IPaddr2): Started centos8-str1 * httpd (systemd:httpd): Started centos8-str1 * zabbix-server (systemd:zabbix-server): Started centos8-str1 * ShareDir (ocf::heartbeat:Filesystem): Started centos8-str1 Node Attributes: * Node: centos8-str1 (1): * master-DRBD_r0 : 10000 * Node: centos8-str2 (2): * master-DRBD_r0 : 10000 Migration Summary: * Node: centos8-str2 (2): * zabbix-server: migration-threshold=1 fail-count=1 last-failure='Mon Mar 29 17:46:14 2021' Failed Resource Actions: * zabbix-server_monitor_60000 on centos8-str2 'not running' (7): call=304, status='complete', exitreason='', last-rc-change='2021-03-29 17:46:14 +09:00', queued=0ms, exec=0ms Tickets: PCSD Status: centos8-str1: Online centos8-str2: Online Daemon Status: corosync: active/disabled pacemaker: active/disabled pcsd: active/enabled/リソースをクリーンアップして綺麗な状態にしておきます。[root@centos8-str2 ~]# pcs resource cleanup zabbix-server

NIC障害(VirtualIP)(CentOS Stream 8)

参考URL:HAクラスタをDRBDとPacemakerで作ってみよう [Pacemaker編]

1回でもfail-countがカウントされるとフェールオーバーするように設定していない場合、migration-thresholdのデフォルト値(migration-threshold=1000000)を変更してフェールオーバーするようにします。[root@centos8-str1 ~]# pcs resource defaults migration-threshold=1

VMware Host Clientにログインし、ホスト(centos8-str1)のネットワークアダプタ(VM Network (接続済み))を切断してVirtualIPを停止します。(30秒ほど待ちます。) [root@centos8-str2 ~]# pcs status --full;dateエラーをクリーンアップします。Cluster name: bigbang Cluster Summary: * Stack: corosync * Current DC: centos8-str1 (1) (version 2.0.5-8.el8-ba59be7122) - partition with quorum * Last updated: Mon Mar 29 14:56:56 2021 * Last change: Mon Mar 29 14:42:29 2021 by hacluster via crmd on centos8-str2 * 2 nodes configured * 8 resource instances configured Node List: * Online: [ centos8-str1 (1) centos8-str2 (2) ] Full List of Resources: * Clone Set: DRBD_r0-clone [DRBD_r0] (promotable): * DRBD_r0 (ocf::linbit:drbd): Slave centos8-str1 * DRBD_r0 (ocf::linbit:drbd): Master centos8-str2 * Resource Group: zabbix-group: * FS_DRBD0 (ocf::heartbeat:Filesystem): Started centos8-str2 * MariaDB (systemd:mariadb): Started centos8-str2 * VirtualIP (ocf::heartbeat:IPaddr2): Started centos8-str2 * httpd (systemd:httpd): Started centos8-str2 * zabbix-server (systemd:zabbix-server): Started centos8-str2 * ShareDir (ocf::heartbeat:Filesystem): Started centos8-str2 Node Attributes: * Node: centos8-str1 (1): * master-DRBD_r0 : 10000 * Node: centos8-str2 (2): * master-DRBD_r0 : 10000 Migration Summary: * Node: centos8-str1 (1): * VirtualIP: migration-threshold=1 fail-count=1 last-failure='Mon Mar 29 14:55:16 2021' Failed Resource Actions: * VirtualIP_monitor_30000 on centos8-str1 'not running' (7): call=173, status='complete', exitreason='', last-rc-change='2021-03-29 14:55:16 +09:00', queued=0ms, exec=0ms Tickets: PCSD Status: centos8-str1: Offline centos8-str2: Online Daemon Status: corosync: active/disabled pacemaker: active/disabled pcsd: active/enabled[root@centos8-str2 ~]# pcs resource cleanup VirtualIPVMware Host Clientにログインし、ホスト(centos8-str1)のネットワークアダプタ(VM Network)を作成しなおします。

ホスト(centos8-str2)のネットワークアダプタ(VM Network (接続済み))を切断しフェールバックさせます。(30秒ほど待ちます。) [root@centos8-str1 ~]# pcs status --fullフェールオーバーしていることが分かります。Cluster name: bigbang Cluster Summary: * Stack: corosync * Current DC: centos8-str1 (1) (version 2.0.5-8.el8-ba59be7122) - partition with quorum * Last updated: Mon Mar 29 15:14:32 2021 * Last change: Mon Mar 29 15:07:40 2021 by hacluster via crmd on centos8-str1 * 2 nodes configured * 8 resource instances configured Node List: * Online: [ centos8-str1 (1) centos8-str2 (2) ] Full List of Resources: * Clone Set: DRBD_r0-clone [DRBD_r0] (promotable): * DRBD_r0 (ocf::linbit:drbd): Master centos8-str1 * DRBD_r0 (ocf::linbit:drbd): Slave centos8-str2 * Resource Group: zabbix-group: * FS_DRBD0 (ocf::heartbeat:Filesystem): Started centos8-str1 * MariaDB (systemd:mariadb): Started centos8-str1 * VirtualIP (ocf::heartbeat:IPaddr2): Started centos8-str1 * httpd (systemd:httpd): Started centos8-str1 * zabbix-server (systemd:zabbix-server): Started centos8-str1 * ShareDir (ocf::heartbeat:Filesystem): Started centos8-str1 Node Attributes: * Node: centos8-str1 (1): * master-DRBD_r0 : 10000 * Node: centos8-str2 (2): * master-DRBD_r0 : 10000 Migration Summary: * Node: centos8-str2 (2): * VirtualIP: migration-threshold=1 fail-count=1 last-failure='Mon Mar 29 15:14:02 2021' Failed Resource Actions: * VirtualIP_monitor_30000 on centos8-str2 'not running' (7): call=179, status='complete', exitreason='', last-rc-change='2021-03-29 15:14:02 +09:00', queued=0ms, exec=0ms Tickets: PCSD Status: centos8-str1: Online centos8-str2: Offline Daemon Status: corosync: active/disabled pacemaker: active/disabled pcsd: active/enabled

リソースをクリーンアップして綺麗な状態にしておきます。[root@centos8-str1 ~]# pcs resource cleanup VirtualIP

プロセスを停止した場合(ShareDir)(CentOS Stream 8)

参考URL:HAクラスタをDRBDとPacemakerで作ってみよう [Pacemaker編]

1回でもfail-countがカウントされるとフェールオーバーするように設定していない場合、migration-thresholdのデフォルト値(migration-threshold=1000000)を変更してフェールオーバーするようにします。[root@centos8-str1 ~]# pcs resource defaults migration-threshold=1

ホスト(centos8-str1)のShareDirリソースのマウントを解除します。[root@centos8-str1 ~]# umount /mnt (30秒ほど待ちます。) [root@centos8-str1 ~]# pcs status --full全てのリソースが他方のホスト(centos8-str2)に移動していることが分かります。Cluster name: bigbang Cluster Summary: * Stack: corosync * Current DC: centos8-str1 (1) (version 2.0.5-8.el8-ba59be7122) - partition with quorum * Last updated: Mon Mar 29 16:28:17 2021 * Last change: Mon Mar 29 16:25:19 2021 by root via cibadmin on centos8-str1 * 2 nodes configured * 8 resource instances configured Node List: * Online: [ centos8-str1 (1) centos8-str2 (2) ] Full List of Resources: * Clone Set: DRBD_r0-clone [DRBD_r0] (promotable): * DRBD_r0 (ocf::linbit:drbd): Slave centos8-str1 * DRBD_r0 (ocf::linbit:drbd): Master centos8-str2 * Resource Group: zabbix-group: * FS_DRBD0 (ocf::heartbeat:Filesystem): Started centos8-str2 * MariaDB (systemd:mariadb): Started centos8-str2 * VirtualIP (ocf::heartbeat:IPaddr2): Started centos8-str2 * httpd (systemd:httpd): Started centos8-str2 * zabbix-server (systemd:zabbix-server): Started centos8-str2 * ShareDir (ocf::heartbeat:Filesystem): Started centos8-str2 Node Attributes: * Node: centos8-str1 (1): * master-DRBD_r0 : 10000 * Node: centos8-str2 (2): * master-DRBD_r0 : 10000 Migration Summary: * Node: centos8-str1 (1): * ShareDir: migration-threshold=1 fail-count=1 last-failure='Mon Mar 29 16:26:48 2021' Failed Resource Actions: * ShareDir_monitor_20000 on centos8-str1 'not running' (7): call=218, status='complete', exitreason='', last-rc-change='2021-03-29 16:26:48 +09:00', queued=0ms, exec=0ms Tickets: PCSD Status: centos8-str1: Online centos8-str2: Online Daemon Status: corosync: active/disabled pacemaker: active/disabled pcsd: active/enabled